What makes a planet habitable?

Earth, Mars, Venus (of old), and Exoplanets

— an online book to bring a new synthesis to astrobiology…

and a tie-in of many great stories that science has to tell us

Vince Gutschick, 2020-21 – author information at the end

This is a freely available book, simply as a webpage with links. I appreciate your sharing it with anyone who may have an interest in the topics or whose interest just may be piqued by it. Please share any comments via the contact form.

It’s still a work in progress. Some additional figures and images are worth putting in shortly… and your comments may move me to make changes.

My presentation is multi-level. It’s aimed both at readers with scientific or technical backgrounds and at readers who have less background and who can gain from a more step-by-step presentation. I draw on biology, physics, chemistry, geology, engineering, astronomy, and a smattering of social science.

The core topic is what makes a planet habitable, in any of three senses, with a view toward better informing the search for habitable planets. Even more, digging into the welter of conditions for habitability lets us appreciate Earth’s habitability, unique within an enormous space, as well as ways to keep Earth habitable. Big changes are coming.

The span of topics is rather unusual. An index is unnecessary, given the searchability of the webpage. There are over 100 internal and external links, along with 56 appendices, sidebars, and supplements. Selected summaries appear under the search term “Our luck in the Universe.”

————————

Let’s talk about everything that a planet must have to support life – simple life, complex life, our lives. How does everything have to work – the planet’s location, composition, structure, geological processes, even its complement of life? What are the deal-makers and the deal-breakers and the simply very interesting? This certainly will illuminate what makes Earth special and may inform how we can avoid breaking it.

Astrobiology is replete with ideas and bits of real data about the possibility that life exists on other worlds, and the possibility that we might detect it, even the possibility that we might cohabit other worlds with such life (or even without other life, as likely on Mars!). Astrobiology remains fragmented in its basis, not yet pulling together the vast array of human knowledge in many, diverse sciences. I offer ideas here for a more comprehensive foundation. In the end I offer that inquiries into what life may be like elsewhere… if it exists… can tell us a great deal about our own Earthly life… which is the only life we may ever experience firsthand in an enormous Universe deployed over insuperable distances.

I offer this book if you’re curious about other worlds where life may thrive or hang on or expand. I provide it for you to make your own judgment about the proposals, hypotheses, and claims for life on other worlds. If you are a research scientist perhaps looking for additional context, I offer syntheses from physics to chemistry to biology to geology to a bit of social science. If you are a curious and critical thinker outside of science, I present concepts at several different levels, any of which may suit you. To that end and to keep the flow of ideas smooth, I’ve put many intriguing and/or deeper concepts in Appendices and sidebars. On the web they load on demand.

“The Universe is not only queerer than we imagine—it is queerer than we can imagine.”

— Biologist J. B. S. Haldane, 1928

… and that’s still true, even after we imagined and (sort of) assimilated the mind-boggling ideas of black holes, quantum entanglement, ice volcanoes on moons, weird organisms of the past like Hallucinogenia (above, in reconstruction), and more. Now we set ourselves to imagine life on other worlds. We have to avoid the simple bias of using our one sample of life, on Earth, to project how life looks, acts, and is set to exist elsewhere. At the same time, we have to use our huge store of knowledge of the principles of physics, chemistry, biology, geology, and cosmology to figure out possible combinations of possibilities. These principles appear to work universally, as far as we’ve tested them. I try to strike that balance here.

Hallucinogenia sparsa reconstruction

Dreams of worlds, dreams we can generate

The worlds beyond Earth are exotic, even beyond exotic. One need only see images taken from spacecraft that we, imaginative and clever humans, have sent a billion times farther than our ancestors could conceive. What lies on the planets, what kinds of stars beam in the stellar systems that we now find abundantly, if remotely, with telescopes of stunning capabilities and with creative minds that can assemble elaborate measurements to reconstruct alien stars and alien worlds around them? Might life like ours, or very unlike ours, be found in our galaxy and beyond? If so, has it evolved to any sentient beings? We now can imagine what might be needed for a planet – an exoplanet – to support life. We do have a fund of knowledge beyond what any one of us yet knows about all the pieces – the chemistry of biochemical reactions, the lives of the stars, short or long, the universal workings of quantum mechanics and of physical processes in geology, and far more. We can work to put it together to explore, at a distance, the habitability of a planet.

Artist’s conception: European Southern Observatory, M. Kornmesser

Dreams of many people now

The habitability of a planet – ours, Mars, exoplanets in other stellar systems – is a focus or even an obsession of many people. As of this writing (2020), billionaires Jeff Bezos, Elon Musk, and Richard Branson all want to travel to Mars or even live there, despite its nearly total lack of “amenities” for life such as us – oxygen in the atmosphere, open water, moderate temperatures, or even a nice sky (rust is not a color we’ve learned to love). Governmental entities in (post-?)industrial nations send probes to Mars to detect signs of life, be it only bacterial, and support research to detect exoplanets and even characterize surface conditions on some of them. Nongovernmental groups, notably the SETI Institute, support searches for communications from intelligent extraterrestrial life. An almost comparable effort, in funds and in researchers’ time, goes into assessing the future habitability of our own planet, Earth, under the impacts of climate change, land-use change, loss of biological diversity, and diverse, globalized forms of pollution from discarded plastics, estrogen mimics in waterways, mine tailings and spills, and more. Perhaps the most productive use of studies of other planets is the insight they yield on Earth’s future habitability, with all its constraints. After all, life barely made it on Earth through a number of mass extinctions. “Civilization exists by geological consent,” said Will Durant. For the moment, we have a demonstrably habitable planet, on the land surface and in the ocean, apparently even in some deep rocks. Some places are “less habitable” than others, meaning they support a lower density of life or (perhaps transiently) a zero density. Think deserts, the low-iron-content Southern Pacific Ocean, active volcanoes. Still, even we humans, with our special needs, infest “the whole habitable Earth, and Canada,” as Ambrose Bierce quipped in his Devil’s Dictionary. That’s remarkable among all planets that we know, near and far. (“Infest” is an apt choice. As our human population has exploded over the last several millennia, the ratio of wild animal biomass to human-managed livestock biomass has dropped from perhaps 30:1 to only 1:25.)

Why I’m writing this

Actually, one big reason that I wrote this is that it’s fun! It certainly is for me, to put many ideas together and in context, and to tell the great story of Earth and beyond, showing how tiny samples of rock put into an isotope mass spectrometer fit in with the mergers of massive neutron stars, detected with the huge and incredibly sensitive laser gravity interferometers. Science has many stories to tell, fascinating, sobering, inspiring, even all three. At our son’s graduation from Caltech in 2008, commencement speaker Robert Krulwich gave the students the lifelong task to tell the great stories that science has to tell. Science is misunderstood, it’s under attack, and retreating to science as a bastion of truth or understanding serves little purpose. Our human development is based on narrative; people love stories; stories have power.

The stories here are often quantitative, with a mathematical core. I delve more deeply into physics and the attendant math, through calculus, series, functions, and such, in a number of sidebars and appendices that provide stronger “hooks” to the issues. The story as a whole can be read while glossing over much of the math, but the math is there for the taking when you wish. Math, metaphorically, is a two-edged sword. Galileo Galilei wrote his Discourses in vernacular Italian, with only a bit of math coming in late, so that he could reach the public. He also wrote that (in translation), “The logic of the universe is written in the language of mathematics.” Both sides are necessary, story/metaphor and math; I’ve included both, for diverse groups of readers. I hope that you, dear reader, may wish to comment here or on the upcoming website, science-essays.com. I thank you in advance for your thoughts.

This text is intended to put strong limits on the habitability of any planet, including our own; astrobiology, the theoretical study of where life might exist other than on Earth, has a great number of untenable ideas that I comment upon. Our planet is in a remarkable set of circumstances, in many senses. First, cosmologically: we have heavier elements such as zinc, iodine, and selenium to make all the biochemicals in our bodies, yet the stellar explosions that made those elements happened neither too near nor too late. Our circumstances are astronomically favorable, with a benign and long-lived Sun whose radiation peaks in the range of energies that can drive biochemical reactions but not toast them. They are geologically favorable, with a planet that has tectonic forces to create dry land (dolphins may be smart but they don’t have cities or cell phones, for what those are worth), that has sorted its elements so they’re nicely available to us, that had water delivered by asteroid impacts before we had to live through any, and much more. I also point out the fragility of life even on Earth, with mass extinctions that our ancestors barely survived; those extinctions are built into the tectonics and mineral cycles of the Earth, which we are changing. I address the hope that we can colonize Mars, with an extensive discussion of the extreme difficulty. The conclusion is readily drawn: fix our treatment of the Earth; there really is no plan(et) B.

I developed this presentation without recourse to existing books and similar resources on astrobiology, in order to give an unbiased (I hope) and independent synthesis that may inform the search for life on other bodies, the debate over the practicality and value of colonizing Mars, and the insights that all such research offers into how we may preserve the habitability of Earth.

There are lots of ways to ask the question of habitability. Is Mars habitable? Do we mean habitable by its own life forms (bacteria, say), if they exist? Or do we mean that exogenous life forms – specifically, humans – could potentially live there, with much artifice: oodles of technology for life support at first, but eventually self-sustaining? NASA, ESA, and the space agencies of China and India are checking out the first prospect or will do so soon. Dreamers, including the wealthy and very tech-savvy Elon Musk, propose that the latter is true.

SpaceX Starship model. Daily Sabah

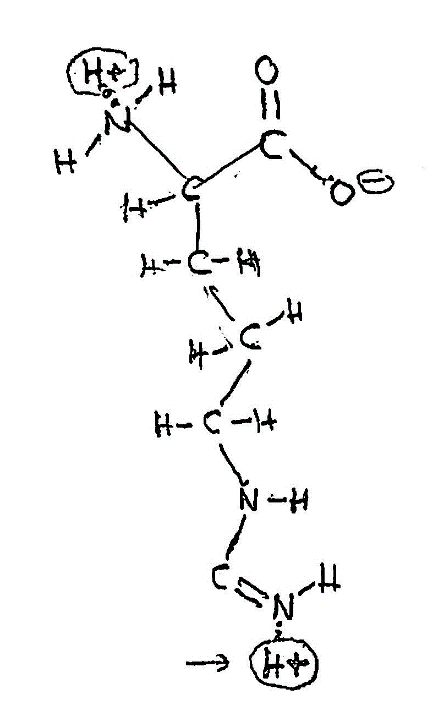

Back to the first definition, that a planet or place on a planet has endogenous life forms: what forms do we want to find? Bacteria might count – elating some evolutionary biologists but making the average person just sigh at the end. We might also seek evidence of more-evolved life forms that are multicellular and whose cells are differentiated for diverse functions – that is, not just colonies of similar cells. If we’re talking about animals, we call these metazoans. There’s no analogous one-word term for plants (and their kin that we don’t really call plants) but we would not count strings of cyanobacteria, even if some end cells are specialized to fix atmospheric nitrogen into usable ammonia.

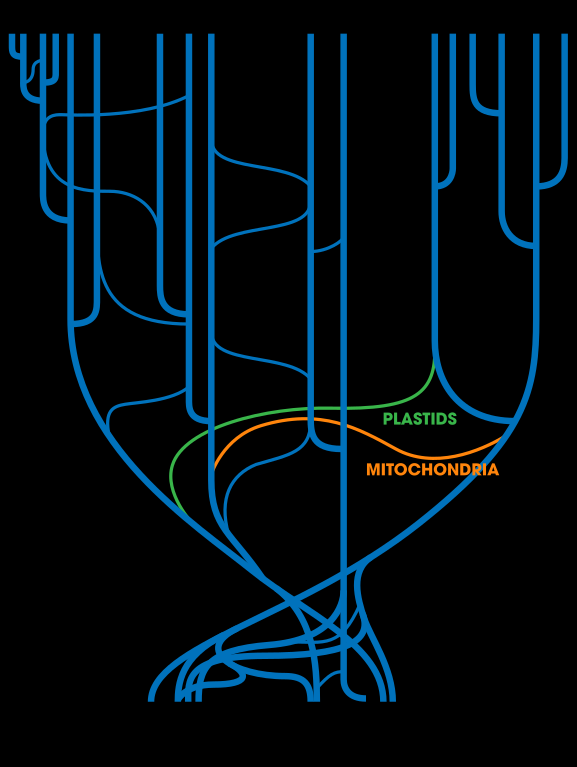

While we’re at it, let’s be open to the idea that the categories of life on another planet are unlikely to fall into our Earthly categories – plant, animal, fungus, bacterium, protist – or into the much more accurate and informative distinctions of Bacteria, Archaea, and Eukaryota (organisms having cells with a membrane-bound nucleus), and then the elaboration into all the various clades (groups of organisms that share a common ancestor) that evolved. That new cladistic view from genetics, plus some fossils, gave us some illuminating new views: birds are the surviving dinosaurs; fungi are more closely related to us animals than they are to plants (a reason that our fungal infections are harder than bacterial infections to kill off without harming us); our own species, Homo sapiens sapiens, once shared the planet with other members of the genus Homo that interbred with us. The tree of life is less a simple tree than an example of cross-linked pipes. On a planet that evolved its own set of complex life forms, is “animal” a valid category, describing organisms that don’t do the primary capture of solar (really, call it stellar) energy but eat others that do, and that have some power of locomotion to distinguish them from, say, fungi? What if some life forms there do both photosynthesis and eating? Hmm, that sounds like our own Euglena, but what if they were big and multicellular? (I can argue that this is highly unlikely, but this isn’t the place to elaborate. I did so in the interview that got me my job at Los Alamos!) The tree of life on any planet may be very complex.

Tree of Life: Zmescience.com

Habitability 1.0, or raw habitability, is the ability of a place (a planet, part of a planet) to support life of at least one form, even just the simplest. Life has to have the properties of self-replication, growth, and maintenance of function; some persistent physicochemical processes don’t count, such as the atmospheric cloud eddies over oceanic islands that can spawn one another and that persist or recur. In any case, we recognize life when we see it (well, if we look hard enough – some slow-growers don’t catch the eye). It’s as US Supreme Court Justice Potter Stewart said, a bit less than helpfully, about pornography: [I can’t define it unequivocally,] but “I know it when I see it.” Even this simple level of habitability requires a concurrence of many conditions: of the stellar system (star size, planetary orbit and axial tilt, planetary mass, delivery of water), of the astronomical history (making heavy elements with a supernova or neutron star merger safely distant in time), of geology (persistence of plate tectonics and a magnetic field), of chemistry (the right greenhouse effect), and more.

Habitability 2.0 is for complex life forms that depend on each other, creating complex ecosystem interactions, with multiple trophic levels (producers, consumers on several tiers) and functional diversification of life forms (such as 250,000 different plants on Earth). That is, it’s more than supporting a range of just bacteria or similar microbes, such as Earth had for about 3 billion years. Diversity may offer resilience to the astronomical and geological disturbances that are bound to come – asteroid impacts or episodes of extreme volcanism. Speculation on what kinds of complex life might evolve elsewhere is impossible now and will likely remain so for a long time. Still, examining our home planet’s complex life and what has preserved it over giga-years serves to instruct us on maintaining it. It also informs us about the challenges of going to habitability 3.0 on, say, Mars.

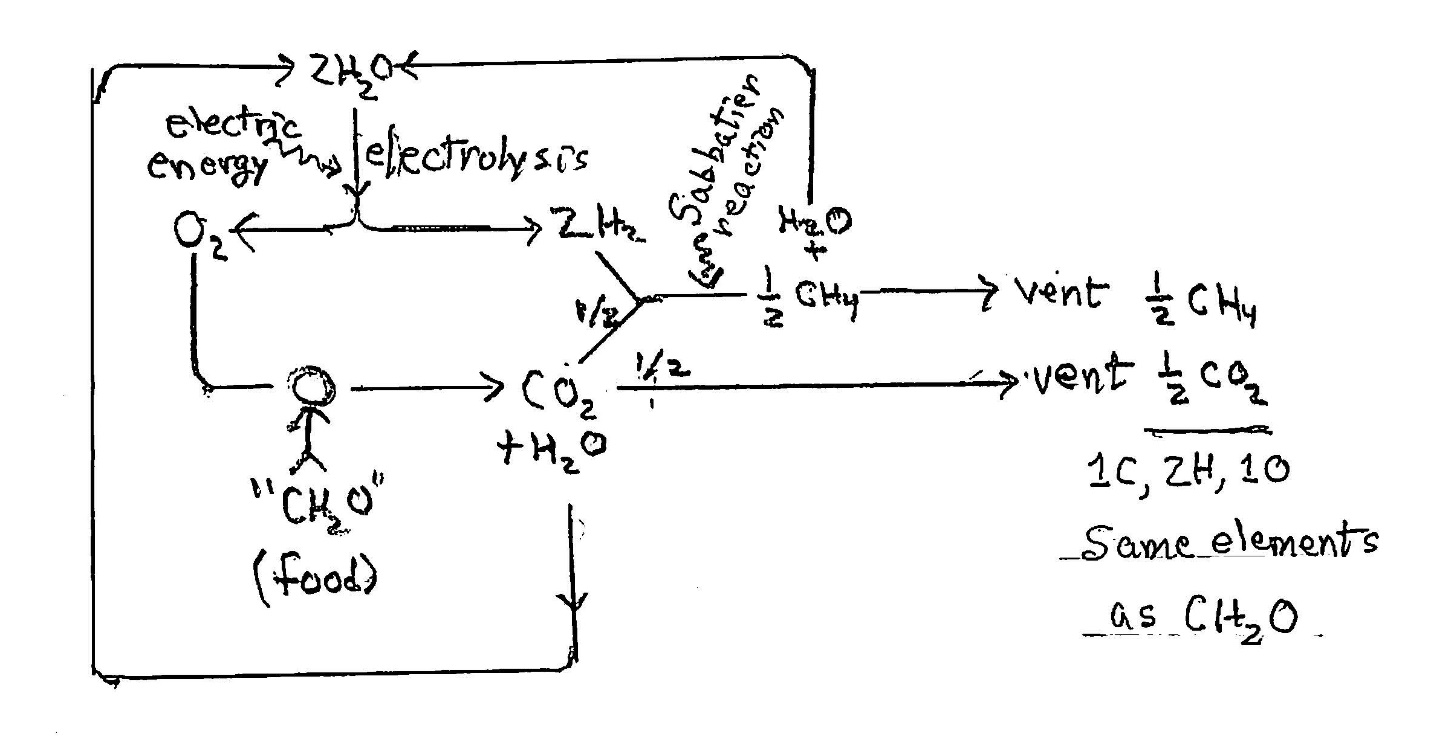

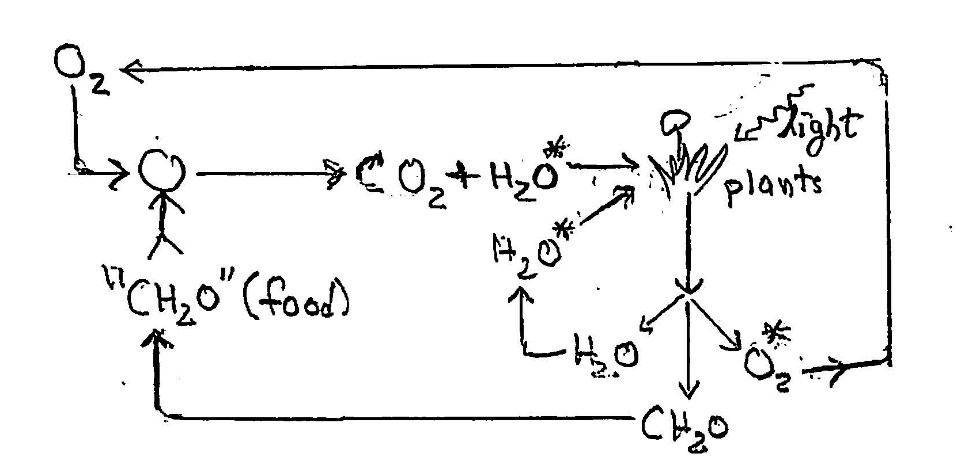

Habitability 3.0 is the ability to support us, even allowing for all our technological contrivances of mining, power generation, air conditioning, chemical transformations, and such. We evolved on a planet (or moon) like no other we’ve ever seen or visited, so we have special needs – water, oxygen, equable temperatures, and more. Toward the end of this book I use the example of Mars, the only world other than Earth that is ever likely to be considered. I provide details of some technological workarounds for numerous missing resources on Mars (e.g., water, O2, equable temperatures, soil and nutrients for growing our food) and for the presence of what we might call generically “nasties” (high UV or cosmic-ray fluxes…).

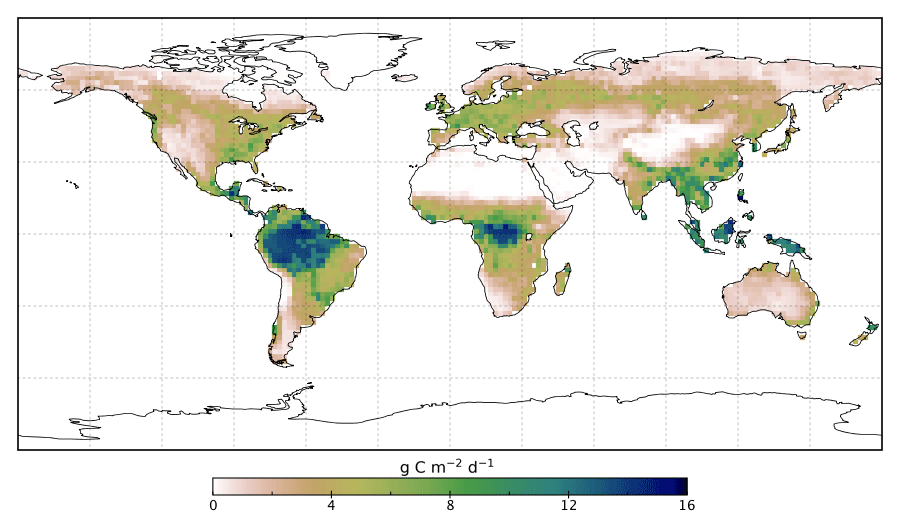

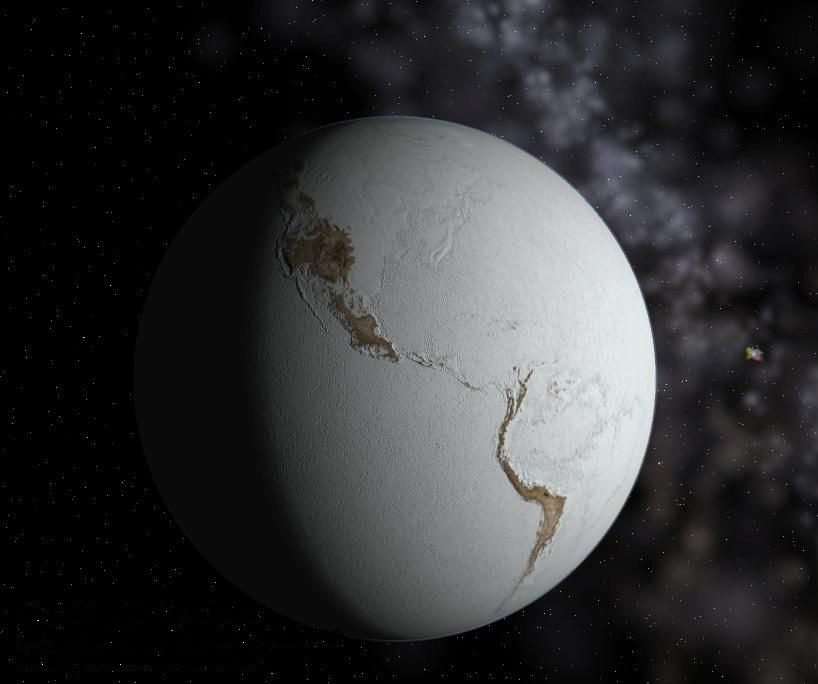

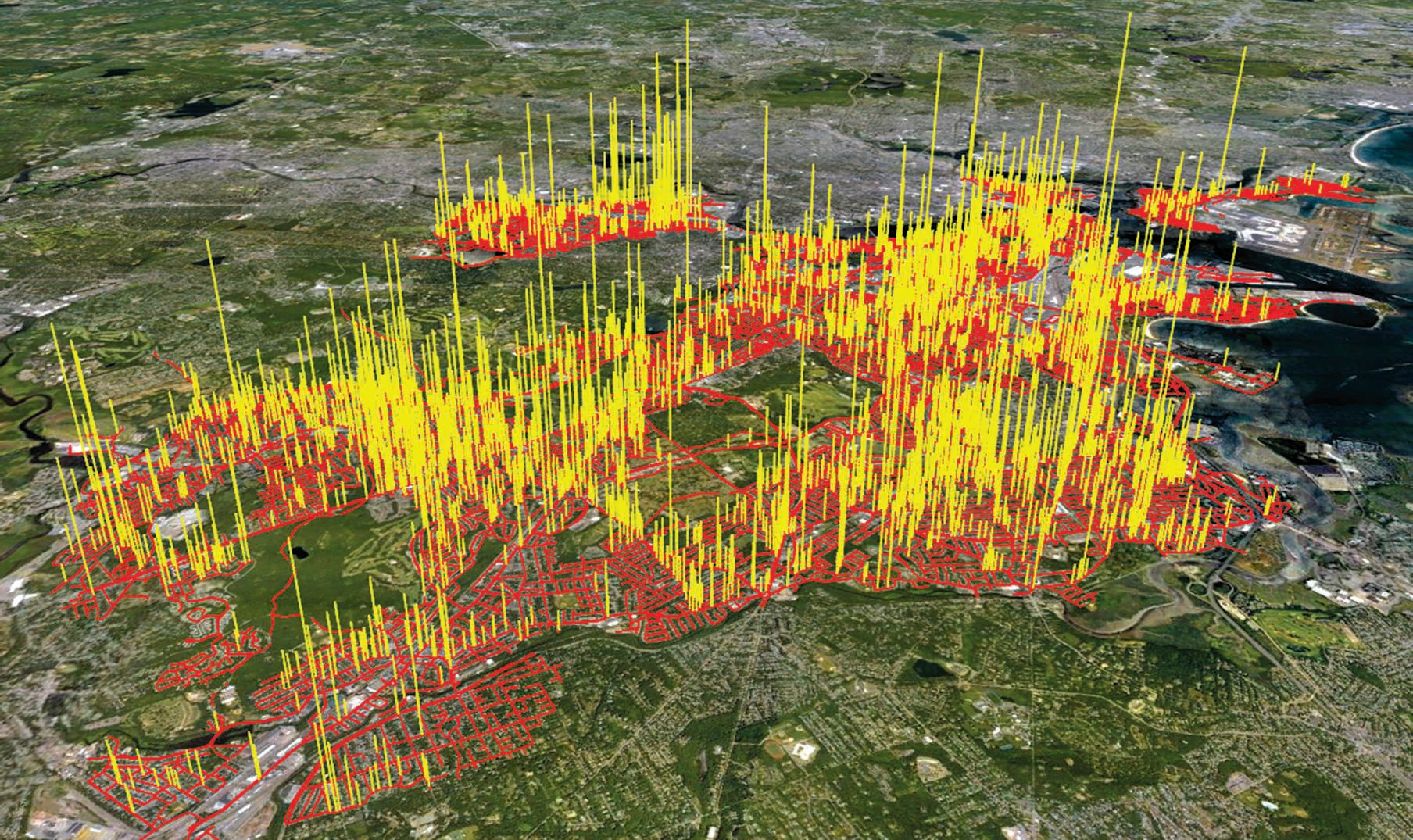

Habitability has at least three dimensions: space, time, and type of life form. Regarding space: not all of a planet is habitable, at least, not for all kinds of life. (Ambrose Bierce once quipped that mankind “infests the whole habitable Earth, and Canada.”) In fact, spatial patterns of habitability are very fine-grained and can be of intricate topology. Consider a small field extending to soil depths and to the air space above. A small part of the soil is habitable by microbe X, another part (perhaps with some overlap) by microbe Y, and no microbe actually inhabits the air (see Denny, Air and Water, for an interesting discussion). Passing through, as by microbes blown across an area, does not count as inhabiting a location; the life form must be able to sustain its population with processes of growth and of reproduction to replace lost members. The parts that are habitable for any life form also tend to comprise many spatial domains with exquisitely complicated connectivity. Imagine that we could do computed tomography through soil to measure (a wild idea for sensors) where life form X might live; the image would have sheaths, bubbles, tori, and other patterns. Right, not inside that buried rock, so warp the habitable geometry around that rock but not into it; not in those clay lenses; not in that anaerobic pocket; and so on. We humans inhabit any given surface location only fleetingly. Regarding time: the very same geographic location may have been uninhabitable by any chosen life form at various times, certainly on geologic scales (e.g., Ice Ages). Even much of Venus, in a coarse-grained view, was likely habitable until as recently as 700 million years ago. So, to declare an area habitable we need to specify the place, with its quasi-fractal geometry, the time, and the life form. We might be accommodating and state that a larger place is habitable for life form Z over a short or long time interval, provided that the life form can migrate around to access the specific habitable places that blink in and out during the time interval.
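The notion of habitable space as many irregular, intricately connected pockets can be explored in a toy model. The Python sketch below is purely illustrative and assumes, hypothetically, that each cell of a small 3-D grid is independently habitable for some microbe with probability p; it then counts the separate habitable domains (cells joined face to face) with a breadth-first flood fill. Real soil habitats are spatially correlated, not random, so this shows only the flavor of the geometry, not a soil model.

```python
import random
from collections import deque


def habitable_domains(size: int, p_habitable: float, seed: int = 0) -> list[int]:
    """Sizes (largest first) of face-connected habitable domains in a random size**3 grid.

    Hypothetical toy model: each cell is habitable independently with probability p_habitable.
    """
    rng = random.Random(seed)
    grid = [[[rng.random() < p_habitable for _ in range(size)]
             for _ in range(size)] for _ in range(size)]
    neighbors = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    seen = set()
    domain_sizes = []
    for x in range(size):
        for y in range(size):
            for z in range(size):
                if not grid[x][y][z] or (x, y, z) in seen:
                    continue
                # Breadth-first flood fill of one connected habitable domain.
                seen.add((x, y, z))
                queue = deque([(x, y, z)])
                count = 0
                while queue:
                    cx, cy, cz = queue.popleft()
                    count += 1
                    for dx, dy, dz in neighbors:
                        nx, ny, nz = cx + dx, cy + dy, cz + dz
                        if (0 <= nx < size and 0 <= ny < size and 0 <= nz < size
                                and grid[nx][ny][nz] and (nx, ny, nz) not in seen):
                            seen.add((nx, ny, nz))
                            queue.append((nx, ny, nz))
                domain_sizes.append(count)
    return sorted(domain_sizes, reverse=True)


# Compare sparse and denser habitable space in a 30 x 30 x 30 block.
for p in (0.15, 0.30, 0.45):
    domains = habitable_domains(30, p, seed=1)
    print(f"p = {p:.2f}: {len(domains)} domains, largest = {domains[0]} cells")
```

At low p the habitable space is many tiny, isolated pockets; above the site-percolation threshold of a cubic lattice (about 0.31) most habitable cells join one sprawling, convoluted domain, a loose analogue of how the ability to migrate among patches enlarges what counts as habitable.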

Sketch of Snowball Earth: Neethis, Wikimedia Commons

There is another obvious qualifier, or, really, more of an expansion to the definition of habitability for a given life form. A location may be potentially habitable by a given life form but not inhabited by that life form at a given instant or over a given time interval. Ecologists distinguish the fundamental (potential) niche that a life form could fill from the realized niche where it currently resides. New Mexico State University astronomer Jason Jackiewicz noted, “If someone on another planet visited Earth 700 Myr ago, when it was covered in ice, s/he would have kept on going.” Habitability is not continuously apparent. He/she/it/?? could have concluded that Earth is not habitable – not even “mostly harmless,” as in the devilishly humorous Hitchhiker’s Guide to the Galaxy. The thread of habitability was nearly broken then and at several mass extinctions that offer enormous insight about our planet and others, as I bring in later here. Geological and chemical relics of now-severed habitability appear on Venus, in plausible models. Our own Earth apparently froze over, or nearly so, several times.

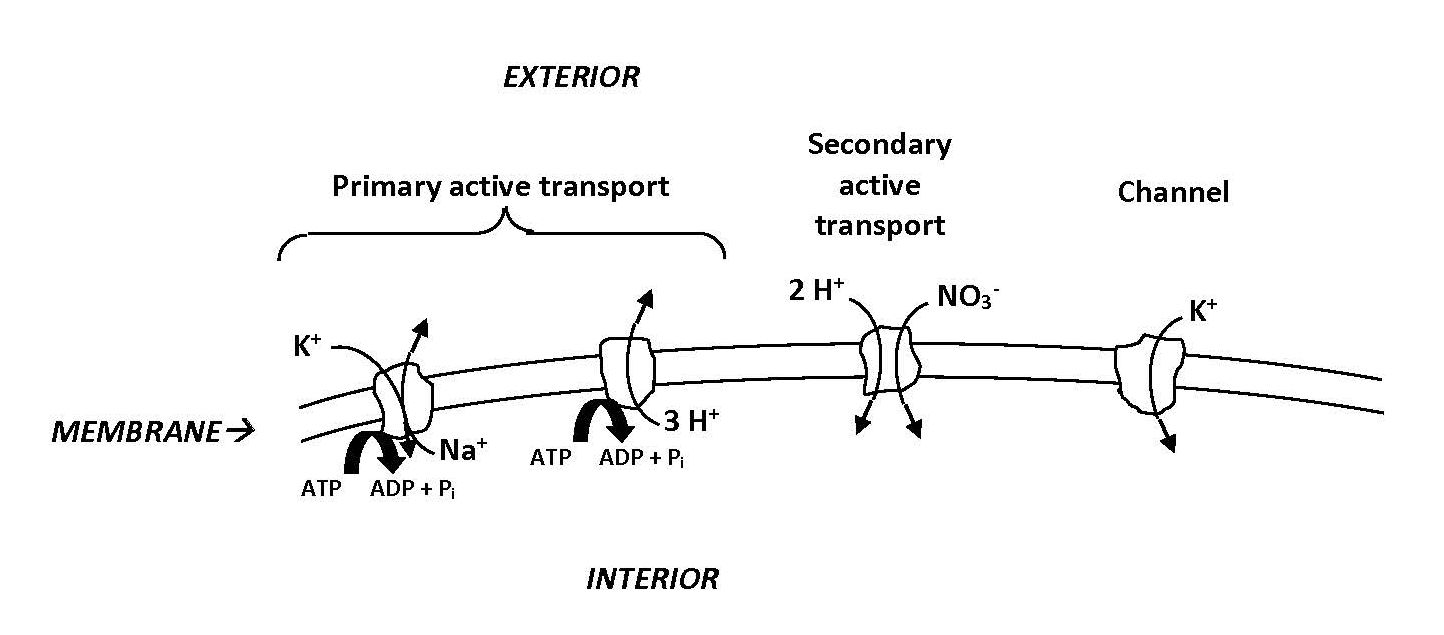

Co-dependence: There is a further, biotic dimension of habitability: the ability of life form A to maintain a population at a location and time may depend upon the presence OR absence of (many) other life forms. A large-scale case is the elimination of most anaerobic bacteria from the surface water of the ocean when cyanobacteria evolved oxygenic photosynthesis. For the bacteria called obligate anaerobes, oxygen even at quite small concentrations fully inhibits growth or is even lethal. Conversely, some life forms require other life forms to exist. Animals on Earth require plants as food and microbes as sources of vitamin B12. Fungi require plants or animals as “food” sources. Most flowering plants rely on animal pollinators rather than on wind.

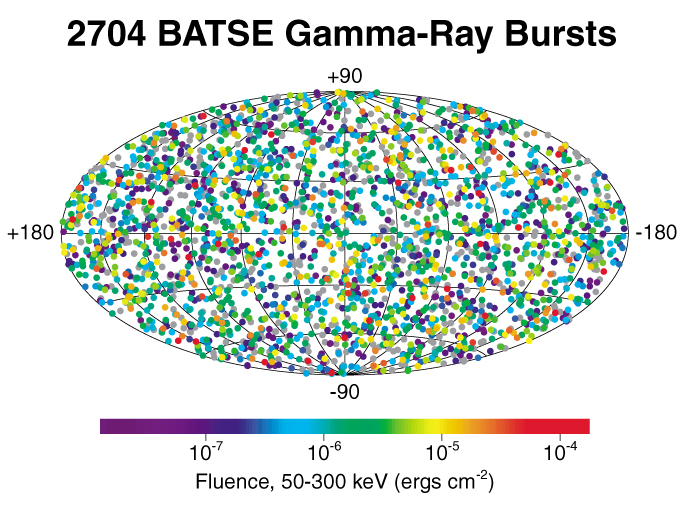

In the common view, all we may ask to declare a planet or a place to be habitable is that significant parts of it have life forms that we’re willing to count. With that proviso, we can then look at the physical, chemical, geological, and astronomical conditions that might support life. Borrowing terms used in mathematical physics, we need to attend to the boundary conditions in time and space: What is the lifetime of the star that is “useful” to life, within bounds of its output that warms a planet and refrains from unsettling outbursts? What defines a “friendly” neighborhood, with the Goldilocks amount of local asteroids that deliver water but become infrequent destroyers, and with a decided lack of new supernovae, gamma-ray bursts, and such? Remember that all planets start out in a stellar nebula with asteroids and planetesimals as often-threatening neighbors. Many stars, including nearly half of Sun-like stars, are binaries or members of larger multiple systems, with great consequences for illuminating a planet and keeping its orbit stable.

A note about “we:” I use that ubiquitous pronoun with two meanings, each fairly clear in context. By “we” I often mean you, the reader, and me. Other times it’s clear that I intend to denote the whole scientific community, of which I’m a small part; to avoid nosism, I use “I” when it’s my personal interpretation of a topic. Science is a worldwide, over-all-time effort to understand the world under the premise that its structures and activities all arise from universal laws of physics, which determine chemistry, both of which determine biology, with much chance mixed in at junctures (the lucky coalescence of conditions for the first living cells, for example). In numerous cases we can describe that chance to an exquisite degree – look at hugely successful statistical mechanics. So, we scientists all work to share our understandings from pieces of the puzzle. “Art is I, science is we,” it was once said. Science and scientists have stories to tell. We need to tell them, as Robert Krulwich implored all the graduates at Caltech’s commencement in 2008; Lou Ellen’s and my son, David, was one of those graduates. This book is my bit, my set of stories from a career that happily crossed a range of sciences.

A very brief history of speculations about habitable planets

The history of studies of habitability is long but not too long. We now know that in our Solar System only Earth is habitable by visible life. We’re still speculating about other places. Was Mars once habitable, if only by bacteria? We’ll get to that topic. Ditto for Venus, perhaps with more evolved life until about 700 million years ago (no Perelandra now). We humans have been speculating about extraterrestrial life for ages, starting from very limited bases. Let’s put aside short fables of the ancients about life on the Moon or on celestial objects not even known to be planets in ancient times. Notable stabs at evidence, on plausible or implausible bases, include Percival Lowell’s look at Mars with a decent telescope that could image what he took to be canals. Evidence of Earth’s own long-term habitability is only recent. Fossils of multicellular life – mastodons, dinosaurs, Eryops, you name them – were initially interpreted as evidence of creation, or of evolution in an environment largely similar to the current one (clearly not so). No one asked what created and maintained conditions such as breathable air and available water. Over a couple of centuries we humans discovered life that was yet more different, going back to the cryptic bacteria of billions of years ago. There are some credible bacterial fossils, but even better evidence comes from chemical traces that had to come from bacterial life, such as hopanes. By now, we have a wealth of information about who was here through 3.8 billion of the 4.6 billion years of Earth’s history, and what environment they faced in temperature and chemical milieu.

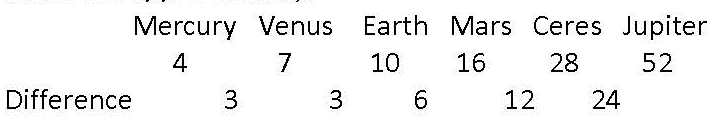

Back to speculation: At a purely statistical level, in 1961 Frank Drake posited his famous equation for the likely number of civilizations in our galaxy with which we might communicate. It’s the product of a cascade of factors, most of them probabilities:

Average rate of star formation in our galaxy,

Multiplied by the fraction of stars having planets,

Multiplied by the average number of planets per star that can support life,

…Multiplied by several more factors
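The cascade above is usually written compactly as N = R* · f_p · n_e · f_l · f_i · f_c · L, where R* is the star-formation rate, f_p the fraction of stars with planets, n_e the number of potentially life-supporting planets per such system, f_l, f_i, and f_c the fractions that go on to develop life, intelligence, and detectable communication, and L the number of years such signals last. The Python sketch below simply multiplies the factors out; every parameter value in it is an illustrative assumption, not an estimate from this book.

```python
import math
from dataclasses import dataclass


@dataclass
class DrakeInputs:
    star_formation_rate: float   # R*: new stars per year in the galaxy
    frac_with_planets: float     # f_p: fraction of stars that have planets
    habitable_per_system: float  # n_e: potentially life-supporting planets per system
    frac_life: float             # f_l: fraction of those on which life arises
    frac_intelligent: float      # f_i: fraction of those that develop intelligence
    frac_communicating: float    # f_c: fraction that emit detectable signals
    lifetime_years: float        # L: years a civilization stays detectable


def drake_n(p: DrakeInputs) -> float:
    """Expected number of detectable civilizations: the product of all seven factors."""
    return math.prod([
        p.star_formation_rate, p.frac_with_planets, p.habitable_per_system,
        p.frac_life, p.frac_intelligent, p.frac_communicating, p.lifetime_years,
    ])


# Illustrative (assumed) values only.
guess = DrakeInputs(1.5, 1.0, 0.2, 0.1, 0.01, 0.1, 10_000)
print(f"N = {drake_n(guess):.2f}")
```

Because N is a plain product, shrinking any single factor tenfold shrinks N tenfold; that is why tight limits on the third factor, n_e, matter so much.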

The third factor is largely my focus. Given a planet, is it habitable? There are many, many physical, chemical, geological, orbital, and astronomical factors that, taken together, mostly prohibit habitability. Vaclav Smil addressed the topic in his 2002 book, The Earth’s Biosphere: Evolution, Dynamics, and Change (MIT Press). Many others have addressed additional factors required for life to communicate over vast interstellar or intergalactic distances. At the end, I offer a few comments on the topic.

Multiplied by the enormous number of planets, perhaps 100 billion in just our Milky Way galaxy, the probability of life somewhere is still very high. However, living planets are likely to be mind-bogglingly far apart. The closest Earth-like planet found to date is 1,400 light-years away, making for a pretty dull conversation with 2,800 years per round of dialogue, even if that almost-Earth supports intelligent life. More likely, the nearest planet with intelligent life is much farther away. We’re extremely unlikely to be contacted, which we should be grateful for, given the history on Earth of contacts between cultures of very different levels of technology (it ends badly for the culture of lower technology). Nonetheless, it is exhilarating to look in detail at what it took to make life on Earth, the confluence of many events of low probability.
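To make “very high probability, yet mind-bogglingly far apart” concrete, here is a rough Python sketch under loudly stated assumptions: N planets, each independently inhabited with some small probability p (the one-in-a-billion value below is hypothetical), scattered uniformly through a thin disk whose radius and thickness are assumed round numbers for the Milky Way’s stellar disk. It computes the chance that at least one planet is inhabited, a typical spacing between inhabited planets, and the round-trip light time for one message and one reply.

```python
import math

# Assumed round-number geometry for the Milky Way's stellar disk, in light-years.
DISK_RADIUS_LY = 50_000.0
DISK_THICKNESS_LY = 1_000.0


def prob_at_least_one(n_planets: float, p_each: float) -> float:
    """P(at least one inhabited planet) = 1 - (1 - p)**n, computed stably for tiny p."""
    return -math.expm1(n_planets * math.log1p(-p_each))


def typical_spacing_ly(n_inhabited: float) -> float:
    """Crude spacing between planets scattered uniformly through the disk.

    Uses the 3-D spacing (V/n)**(1/3) while it is smaller than the disk
    thickness; otherwise the 2-D spacing (A/n)**(1/2) across the disk face.
    """
    area = math.pi * DISK_RADIUS_LY ** 2
    spacing_3d = (area * DISK_THICKNESS_LY / n_inhabited) ** (1.0 / 3.0)
    if spacing_3d <= DISK_THICKNESS_LY:
        return spacing_3d
    return math.sqrt(area / n_inhabited)


def round_trip_years(distance_ly: float) -> float:
    """Years for one message to travel out at light speed and one reply to return."""
    return 2.0 * distance_ly


# Hypothetical: 100 billion planets, each inhabited with probability one in a billion.
n_planets, p_each = 1e11, 1e-9
expected = n_planets * p_each
spacing = typical_spacing_ly(expected)
print(f"P(at least one): {prob_at_least_one(n_planets, p_each):.6f}")
print(f"expected inhabited planets: {expected:.0f}")
print(f"typical spacing: {spacing:,.0f} ly; round trip: {round_trip_years(spacing):,.0f} yr")
```

Under these assumptions, life somewhere in the galaxy is a near certainty, yet the roughly 100 inhabited worlds would typically lie about 9,000 light-years apart, nearly 18,000 years per exchange; the 1,400-light-year case in the text gives round_trip_years(1400) = 2,800 years.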

Taking it to a conclusion that many people wish for, are any of the planets other than Earth likely prospects for visiting on foot (clad in space boots) or even colonizing? What might it take to get there safely in due time? Given that transporting people and matter is very costly in money and energy, how can a self-sustaining ecosystem be set up using local materials and energy resources? Our physiology and psychology impose a lot of constraints. If a colony is to perpetuate itself, reproduction is needed, but it faces real genetic challenges, particularly from inbreeding in a small population. We humans may have barely made it through some climatic drama; we’re still not very genetically diverse for a population of our massive size.
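The inbreeding concern has a standard textbook form. In an idealized, randomly mating population of effective size N_e (the Wright–Fisher model: no mutation, selection, or immigration), expected heterozygosity, a common measure of genetic diversity, shrinks each generation by a factor of 1 − 1/(2N_e). The Python sketch below applies that formula; the colony sizes and the 25-year generation time are hypothetical choices for illustration.

```python
def heterozygosity_retained(effective_size: float, generations: int) -> float:
    """Expected fraction of initial heterozygosity left after genetic drift.

    H_t / H_0 = (1 - 1 / (2 * Ne)) ** t for an idealized Wright-Fisher population.
    """
    if effective_size < 1:
        raise ValueError("effective population size should be at least 1")
    if generations < 0:
        raise ValueError("generations must be non-negative")
    return (1.0 - 1.0 / (2.0 * effective_size)) ** generations


# Hypothetical colony sizes over 40 generations (about 1,000 years at 25 years each).
for ne in (50, 500, 5_000):
    kept = heterozygosity_retained(ne, 40)
    print(f"Ne = {ne:>5}: {kept:.1%} of starting diversity retained")
```

In this idealized model a colony with an effective size of 50 loses about a third of its starting heterozygosity in a millennium, and effective size is typically smaller than the head count.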

We now have much more material for our speculations – and for the insight they may afford us for our prospects here on good old Earth. Astronomers and astrobiologists have been spotting many exoplanets (outside our Solar System) with some remarkable similarities to the Earth. However, as Yogi Berra’s son once said, when asked about how he resembled his father, “Our similarities are very different.” Please read on.

The search for habitable planets… and for sentient life

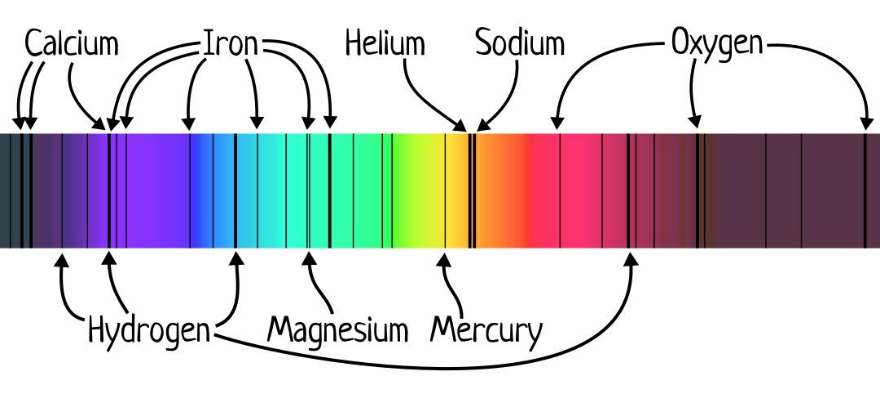

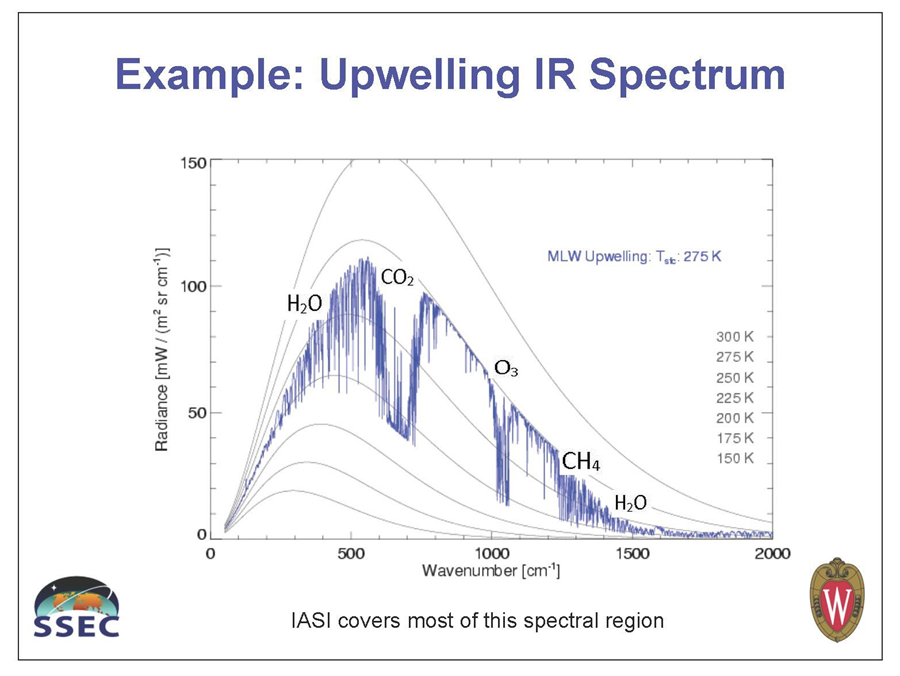

Before we look at the amazing set of conditions for the habitability of a planet, we may look in on the search for potentially habitable planets. This has become a passion among astronomers and among people who call their discipline astrobiology. More than a century back, the possibility of life on Mars was proposed enthusiastically by Percival Lowell, and others took the cue. Recent unmanned missions to Mars have searched for life, at least as microbes. No luck, so far. A flashback in time provides evidence that Venus once could have supported life. More recently, the search moved farther out, to planets of distant stellar systems. Astronomers have had great success in detecting these planets, particularly with the Kepler space telescope and its successors. Among a number of methods of detection (we’ll get to the range of methods near the end), they may use the tiny fraction of light lost as the planet partially occults the central star. They can infer the orbits and estimated temperatures of these exoplanets and may ultimately measure the constituents of the atmosphere on some of them. Both astronomers and astrobiologists then focus on a habitable zone for a planet, defined by stellar and orbital parameters that should keep the planet constantly at a temperature in the range amenable to life. Does an equable temperature range alone make a planet habitable? No one claims that; all cite the need for a medium such as liquid water (or, implausibly, as I argue later, ammonia). There are some novel ways of going deeper into exoplanet surface conditions now. With luck, we can detect the absorption lines of “nice” chemical compounds in the atmosphere of a planet; those of promise include oxygen (likely very rare to be found), carbon dioxide, and methane (made geochemically, even on Earth, but more so by living microbes). The list of candidate planets remains short, currently at zero in my take.
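Two quantities mentioned above have simple first-order formulas, sketched here in Python. The transit depth is roughly the ratio of disk areas, (R_p/R_*)², ignoring limb darkening. The equilibrium temperature balances absorbed starlight against thermal emission, T_eq = [L(1 − A)/(16πσd²)]^(1/4), assuming a fast-rotating planet that spreads heat over its whole sphere and has no greenhouse effect. The constants are standard nominal values; the albedo of 0.3 for Earth is an assumed round figure.

```python
import math

SIGMA = 5.670374419e-8   # Stefan-Boltzmann constant, W m^-2 K^-4
L_SUN = 3.828e26         # nominal solar luminosity, W
R_SUN_KM = 695_700.0     # nominal solar radius, km
R_EARTH_KM = 6_371.0     # mean Earth radius, km
AU_M = 1.495978707e11    # astronomical unit, m


def transit_depth(planet_radius_km: float, star_radius_km: float) -> float:
    """Fractional dip in starlight while the planet crosses the stellar disk.

    First-order geometric estimate (Rp/R*)**2; ignores limb darkening.
    """
    return (planet_radius_km / star_radius_km) ** 2


def equilibrium_temperature(luminosity_w: float, distance_m: float, albedo: float) -> float:
    """Radiative-equilibrium temperature (K) of a fast rotator with no greenhouse effect.

    Absorbed: F(1 - A) * pi R^2 with F = L / (4 pi d^2); emitted: 4 pi R^2 sigma T^4.
    """
    flux = luminosity_w / (4.0 * math.pi * distance_m ** 2)
    return (flux * (1.0 - albedo) / (4.0 * SIGMA)) ** 0.25


earth_depth = transit_depth(R_EARTH_KM, R_SUN_KM)
earth_teq = equilibrium_temperature(L_SUN, AU_M, albedo=0.3)
print(f"Earth-Sun transit depth: {earth_depth * 1e6:.0f} ppm")
print(f"Earth equilibrium temperature: {earth_teq:.0f} K")
```

For Earth seen transiting the Sun, the dip is about 84 parts per million, and the equilibrium temperature comes out near 255 K, some 33 K below Earth’s actual mean surface temperature of about 288 K; the difference is the greenhouse effect.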

Closer to home, astrobiologists wish to get more information about Europa, a moon of Jupiter; Enceladus, a moon of Saturn; and several others. The medium of life, water, might be liquid on these bodies, with flexing of rock by the tidal pull of the giant neighbor raising temperatures above the very low values set purely by radiative balance. Flexing or volcanic heating on various bodies could also be a source of thermal energy, but thermal energy by itself is of little use as metabolic energy. Energy has to flow from a ‘source’ to a ‘sink’ to do useful work, particularly to run chemical reactions in the metabolism of an organism, enabling growth, maintenance, and mobility. Energy that doesn’t flow just sits there, such as warm water over equally warm water – no overturning, no other motion. On Earth, radiant energy flowing from the Sun to photosynthetic reaction centers in green plants, algae, etc. provides this energy for life. Not all energy flows can do significant work, however; only the fraction termed free energy can do work. Sadi Carnot kick-started our knowledge of free energy in 1824 (in appreciation, the French named a street for him in the city of Aigues Mortes; good on ya’, France). There’s a lot of free energy flow in sunlight reaching photosynthetic organisms on Earth. Not so for low-temperature heat flows in flexing moons. There are ways to capture a ludicrously tiny fraction of this ‘geothermal’ (selenothermal?) energy, such as with a Stirling engine, but organisms don’t seem to be able to make one.
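The contrast between sunlight and low-temperature heat can be put in numbers with the Carnot limit, η = 1 − T_cold/T_hot, the largest fraction of a heat flow that any engine could convert to work. The Python sketch below is a rough illustration, not a full treatment: it treats sunlight as heat from a source near the Sun’s surface temperature (about 5,800 K) delivered to a 300 K surface, which somewhat overstates the free energy of radiation, and it assumes, hypothetically, that moon-ocean life could tap a 2 K contrast in near-freezing water.

```python
def carnot_efficiency(t_hot_k: float, t_cold_k: float) -> float:
    """Maximum fraction of a heat flow convertible to work between two reservoirs."""
    if t_cold_k <= 0 or t_hot_k <= t_cold_k:
        raise ValueError("need t_hot_k > t_cold_k > 0 (kelvin)")
    return 1.0 - t_cold_k / t_hot_k


def max_work_w(heat_flow_w: float, t_hot_k: float, t_cold_k: float) -> float:
    """Upper bound on power (W) extractable from a steady heat flow."""
    return heat_flow_w * carnot_efficiency(t_hot_k, t_cold_k)


# Assumed, illustrative temperatures (kelvin).
sunlight = carnot_efficiency(5_800.0, 300.0)   # solar-surface source, Earth-surface sink
moon_water = carnot_efficiency(275.0, 273.0)   # slightly warmer vs. near-freezing water
print(f"sunlight: {sunlight:.1%}, tidally warmed water: {moon_water:.2%}")
print(f"ratio: about {sunlight / moon_water:.0f} to 1")
```

With these assumed numbers, sunlight allows roughly 95 percent conversion at best, while the 2 K contrast allows less than 1 percent, and real organisms would capture only part of either ceiling.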

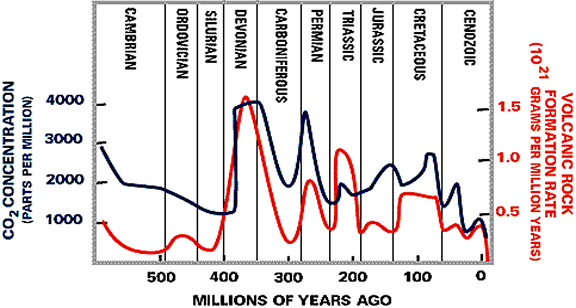

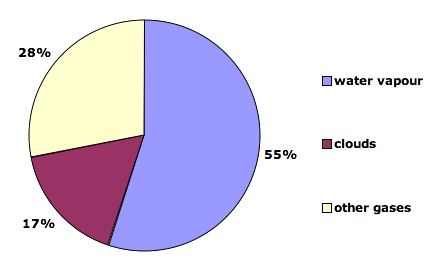

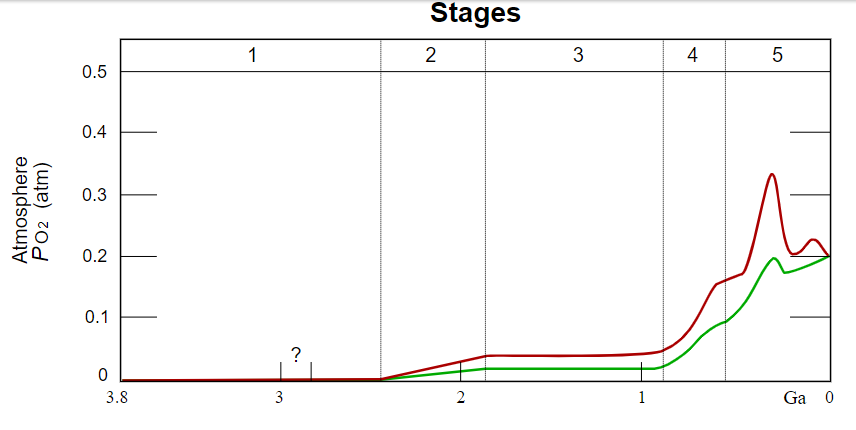

There’s much more to the story when we consider other factors of biology, chemistry, and physics. Astrobiologists know that certain chemical compounds in a planet’s atmosphere can signal the presence of life, but presence isn’t enough. Chemical elements have to be recycled from losses to erosion to keep organisms supplied – tectonic activity, with all its hazards of volcanoes and earthquakes, may be a necessity. For building blocks of bodies carbon appears necessary, in chemically reduced form (sorry, Mars, with all your oxidized carbon as carbon dioxide). To run critical electron-transport reactions the transition metal elements appear to be equally necessary, kept at the surface, not all sequestered in the planet’s core. Also, any atmosphere has a greenhouse effect, but the warming can go terribly wrong in a short time. Earth almost lost its life when early cyanobacterial life liberated oxygen to oxidize methane to carbon dioxide, almost freezing the Earth solid – more on that, later. Venus had the opposite experience, baking away any chance for life, though not the fault of any life on it.

Mostly, Devil’s Advocate

Spoiler alert? I conclude that life is extremely unlikely to be found; it may exist, but at unimaginably great distances from us. My synthesis is in the hope of raising the bar in the discussion. Track my arguments if you wish and find the loopholes – the chase is fun. Nonetheless, I offer that the probability of life in the rest of the Universe is very high. The probability that it is very, very far from us is also very high. Reasonable estimates of the probability of any planet being habitable, or, more so, of harboring life, and even more so, of harboring intelligent life, are in the realm of very small numbers. Actually, what I find compelling is not the prospect of life elsewhere. It is the understanding that the conditions for life here on Earth were so exquisitely unlikely, and that we can use the understanding to help preserve those conditions against what are mostly our own activities.

For the full story, we have a lot of topics to delve into:

- The excitement a few years ago over Proxima Centauri b, with a warm planet

- Other exoplanets, now found in the thousands with virtuoso use of telescopes on Earth and in orbit. Many may be warm enough. How many meet many other conditions for life?

- SETI, the search for extraterrestrial intelligence – upping the criteria from microbes to something like us

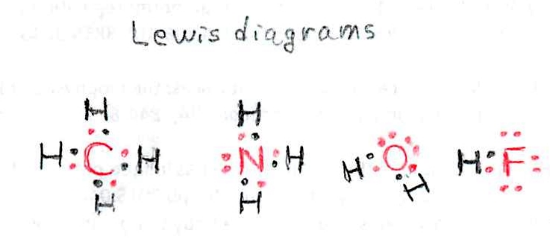

- The unique chemistry of life: water, carbon, and a bunch of elements present by luck

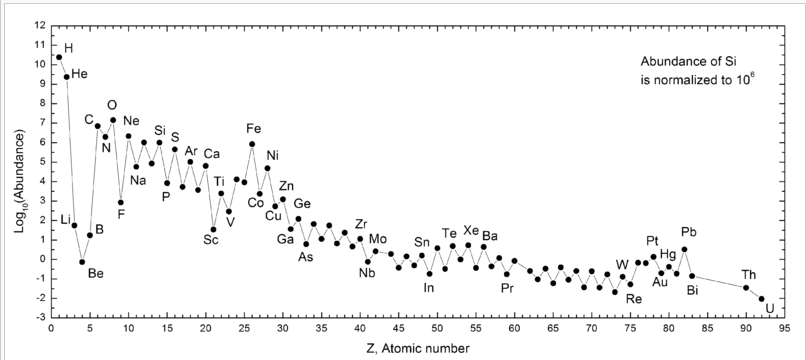

- Nucleosynthesis, or how those elements got here while sparing us the cataclysmic events needed: that’s the help from supernovae or neutron-star mergers, but none that were recent!

- The right orbit, the right star, the Moon’s help, Jupiter’s help

- The right number of impacts: being on a rocky planet while asteroids from the icy world deliver our water but don’t do the Chicxulub extinction number on us too frequently

- Volcanoes, mountain building, earthquakes, and other accompaniments to the big plus side of plate tectonics in making dry land, renewing soils, etc.

- How Venus may have remained habitable until 2/3 of the way toward the present

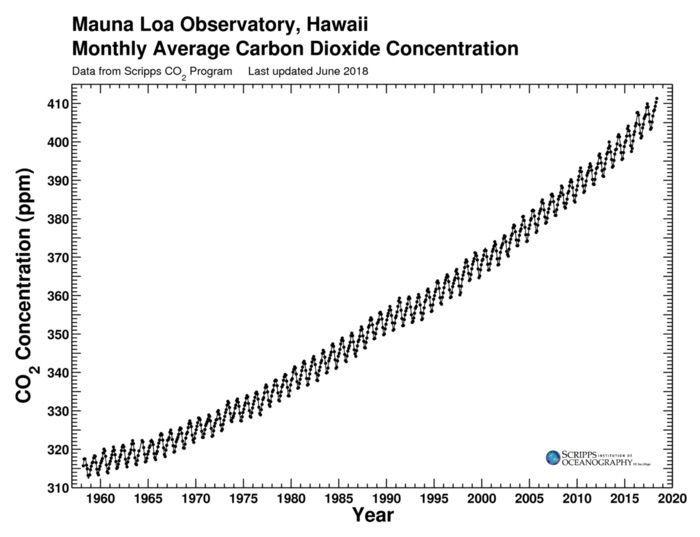

- The greenhouse effect and its several catastrophic excursions that almost did life in

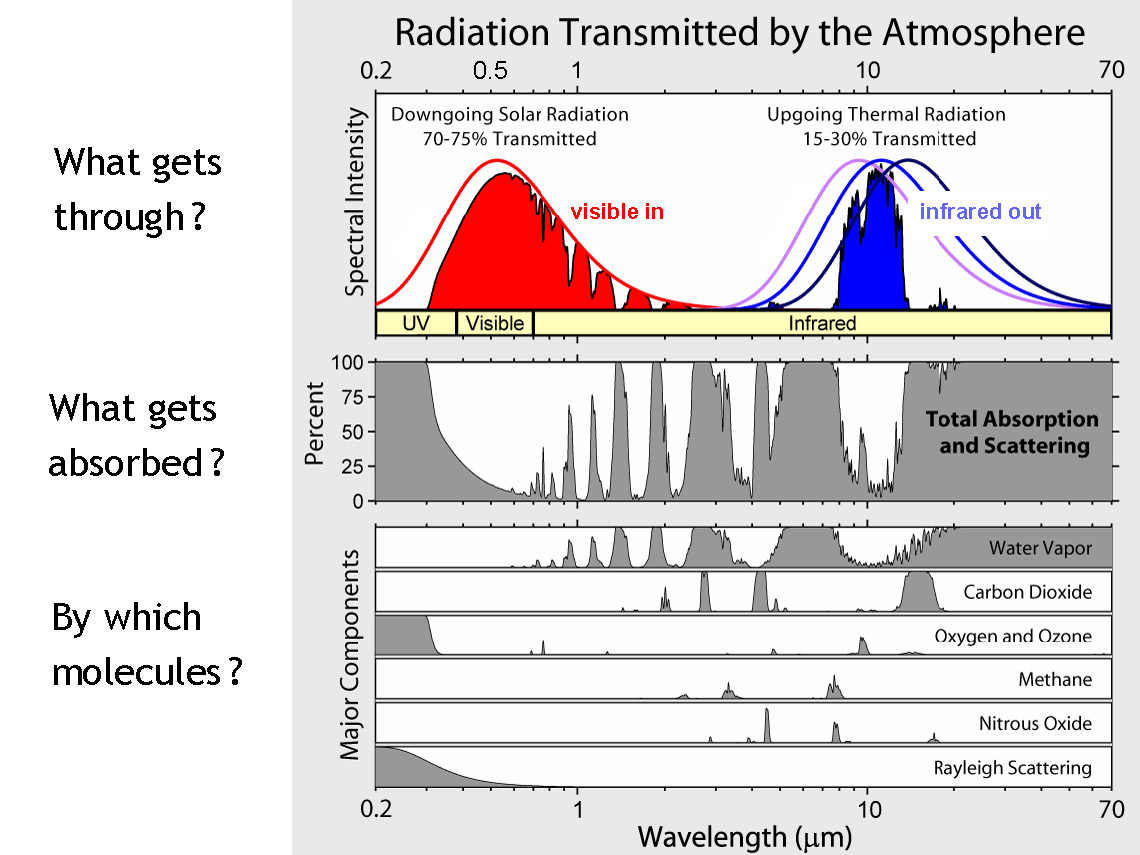

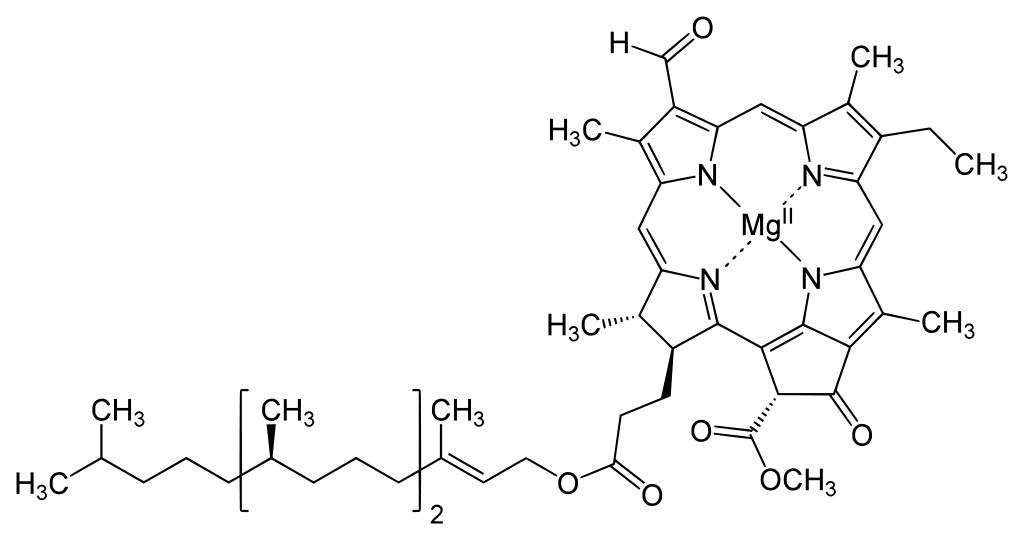

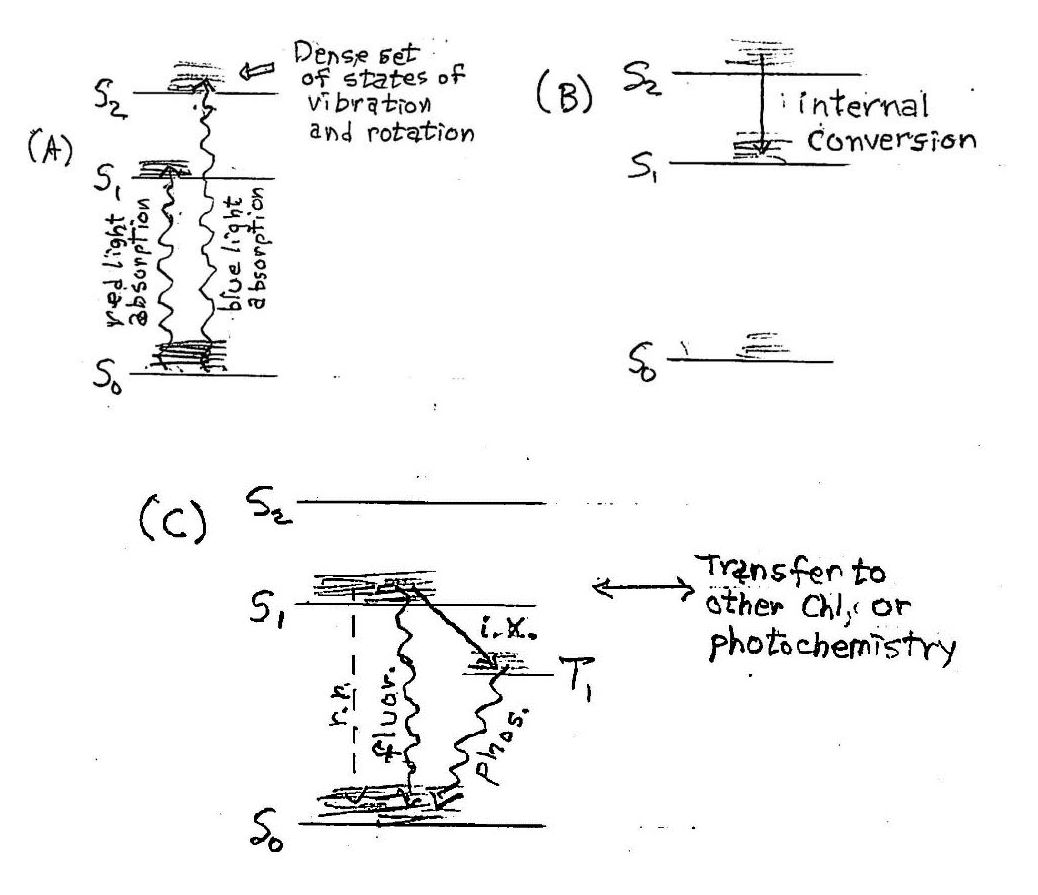

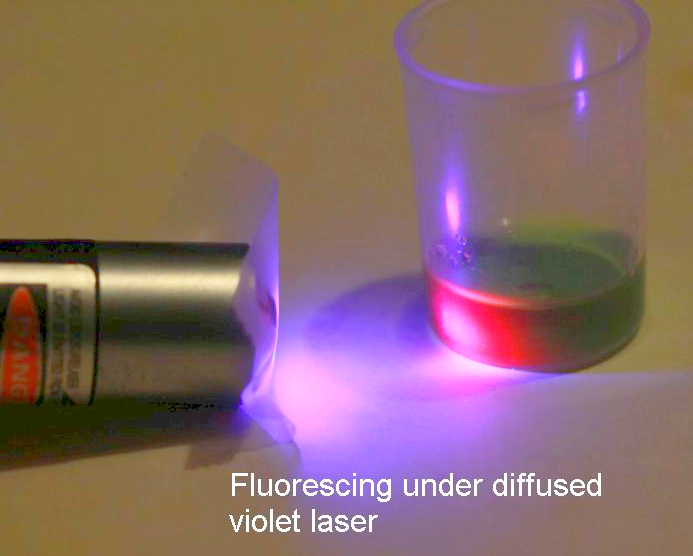

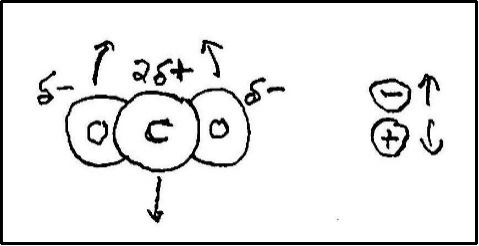

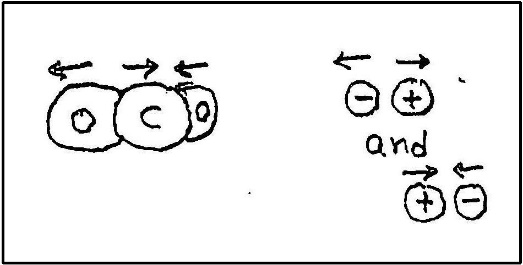

- Photosynthesis using visible and near-visible radiation is a sine qua non; heat provides no energy for metabolism

- Plants: We need them but what have they done to the Earth in the past?

- Terrestrial life evolving fast enough to get to sentient life before the Sun cooks us – a shout out to Elon Musk

- OK, planet X is habitable; can we get there? Would we relish it?

- The rocket equation: why it’s so hard to travel fast and far

- In the words of Will Durant, civilization exists (only) by geological consent, subject to change without notice: Earth’s tenuous habitability with its mass extinctions

- … and more

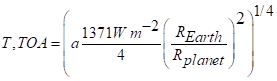

What astrobiologists want first and foremost: a reasonable temperature on the planet

Getting the temperature right. Simply, life on Earth needs temperatures that keep water liquid but not too hot, at least part of the time. Certainly, there are unfavorable excursions. Siberian air temperatures reach -60°C; soils in hot deserts reach 70-plus °C; Grand Prismatic Spring in Yellowstone National Park hits 89°C at its center, with colorful bacterial mats colonizing its cooler margins. Deep in the ocean at the spreading ridges are black smokers, vents of mineral-laden water. Microbes there support other organisms such as unusual clams and shrimp while tolerating temperatures of over 120°C… but temper that with the effects of enormous hydrostatic pressure that both keeps water from boiling and stabilizes all those critical biochemicals such as proteins against thermal denaturation. We then expect life to thrive (that is, both survive and be metabolically active) somewhere between -2°C in cold seawater and hot surface pools such as those at Yellowstone National Park, at perhaps 90°C. (Those heat-tolerant organisms gave us a heat-resistant DNA polymerase that enables so much of modern biology via the PCR method. It’s how we know, among other things, that we modern humans have some Neanderthal and Denisovan genes in us, as well as viral genes from way back. We’ve been genetically engineered by nature.)

These temperature ranges far exceed our human comfort zones. At the most extreme temperatures, let’s not expect life to look anything like us. Estimating the temperature regimes for organisms on distant planets is strongly limited by our lack of knowledge of what geological structures exist on them and what organisms might live there. Here on Earth organisms find or create local microenvironments where conditions may depart radically from the gross air or soil temperatures. Lizards and snakes bask in the sun or retreat to cool crevices. (There’s little such thermal differentiation in water; water conducts heat well, especially heat coming from below with convection that mixes it, averaging out most local environments.) We humans can use clothing to great effect, with some surprising strategies of deployment (see an Appendix, “Keeping cool with long sleeves and pants in the sun”). Plants transpire water, mostly as the trade-off for taking in CO2 for photosynthesis, but the evaporation also cools their leaves. Subtleties can still escape us. Ectothermic (cold-blooded) reptiles thrived in the “Saurian sauna” on Earth in the Silurian age, when the surface temperature appears to have averaged about 35°C. There was precious little space for warm-blooded animals to shelter. On the other side, the cold side: recently it was found that modern-day moose overwinter badly in areas cleared of branches by past forest fires; the ground and air cool too much by thermal radiation to space when few intact branches block the optical path from ground to sky. In short, for planets other than Earth we can only make estimates of the gross environment: mean air or soil temperatures over large areas. Life forms, if any exist, have to find their own coping mechanisms to bring their internal temperatures into the survival zone.

Grand Prismatic Spring, Yellowstone NP

Outside the equable temperature limits for organisms to be active, some organisms can survive sometimes (note the double “some”) by going inactive, as by hibernating. Others create resistant forms such as spores. Humans make some striking acclimations. People live in, or at least visit, the Danakil Depression (above; brilliant-ethiopia.com) to collect valuable salt, though air temperature there routinely makes excursions to 50°C, way above our body’s core temperature of a nominal 37°C. In these conditions we can lose heat only by evaporating water, mostly as sweat from our skin and partly from our airways. The great story of coping with temperature extremes is written large in the literature of physiology. A good presentation with mechanistic understanding is in the book An Introduction to Environmental Biophysics, by G. S. Campbell and J. M. Norman.
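When the air is hotter than the skin, convection and radiation add heat rather than remove it, so evaporation has to carry off both metabolic heat and the heat gained from the surroundings. A rough sketch of the water cost follows, assuming a latent heat of vaporization of about 2.4 MJ/kg near skin temperature and treating 1 kg of water as 1 litre. The 100 W resting load and the 400 W hot-day load are illustrative assumptions, not measurements.

```python
# Assumption: latent heat of vaporization of water near skin temperature (~35 °C)
LATENT_HEAT_J_PER_KG = 2.4e6


def evaporation_litres_per_hour(heat_load_w: float) -> float:
    """Water that must evaporate each hour to remove a steady heat load.

    Treats 1 kg of water as 1 litre.
    """
    kg_per_second = heat_load_w / LATENT_HEAT_J_PER_KG
    return kg_per_second * 3600.0


resting = evaporation_litres_per_hour(100.0)  # assumed resting metabolic heat
hot_day = evaporation_litres_per_hour(400.0)  # hypothetical: metabolism plus heat gained from hot air and sun
print(f"resting: {resting:.2f} L/h, hot day: {hot_day:.2f} L/h")
```

Under these assumptions a person must evaporate roughly 0.6 litres of water an hour just to hold body temperature steady, which is why water supply, not heat tolerance alone, limits time in such places.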

In 2003 my colleague, Hormoz BassiriRad, and I put together a broad perspective on how organisms – plants, animals, microbes, … – withstand extreme episodes of adverse conditions of temperature, water status, and other environmental conditions. We went from the physiology of individuals to the ecological and evolutionary effects. We brought in the spectrum or the statistical distribution of adverse conditions; how often is often and for what degree of extremity? A similar perspective is worth taking on any study of life elsewhere; the environment is always changing, and surviving 99% of the time is not good enough.
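A quick calculation shows why 99% is not good enough. Assuming, for illustration only, that each adverse episode (a drought, a heat wave, a hard freeze) is independent and survived with probability 0.99, the chance of getting through all of them shrinks geometrically:

```python
def survive_all(p_per_episode: float, n_episodes: int) -> float:
    """Chance of surviving every one of n independent adverse episodes."""
    return p_per_episode ** n_episodes


for n in (10, 100, 1000):
    print(f"{n:5d} episodes: {survive_all(0.99, n):.3g}")
```

Over 100 such episodes the odds of surviving every one are only about 37%; over 1000, they are a few in 100,000. Real episodes are neither independent nor equally severe, which is exactly why the statistical distribution of extremes matters.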

With a diversity of physiological, behavioral, and developmental acclimations enabling organisms to survive and prosper in environments that may reach temperatures that are extreme in our view, it’s not possible to set hard-and-fast limits for the livable range, even for familiar Earthly life. That said, there are regimes of temperature in space and time that are real deal-breakers for life and many more regimes that seem to militate against abundant life and, especially, multicellular life and the subset of life that’s intelligent. Temperatures should not stay extreme for times longer than the slowest generation cycle of organisms.

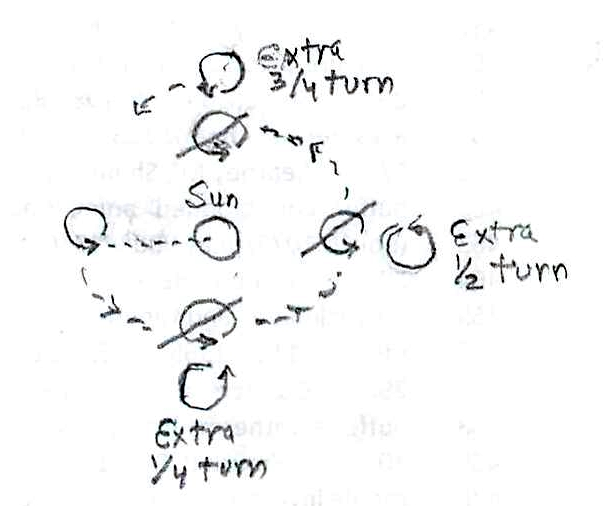

Orbital physics enters here. À la the Drake equation, a planet can’t be so near its star or so far from it that it is permanently hot or cold. That’s a given. It gets far more complex and interesting, as we’ll detail later. There are planetary orbits that endow a planet with a good mean temperature but make it too cold or too hot almost everywhere or for too long a portion of that planet’s year. A planet tidally locked to face the star is very hot on that face and very cold on the opposite face. That leads to the atmosphere, if it exists at all, condensing out as ices on the cold side (at least those chemical components useful for life; skip argon, for example). Witness Mercury in its 3:2 spin-orbit resonance with the Sun: the planet rotates three times for every two orbits, exposing different areas to sunlight, but each area stays in daylight for a full Mercurian year (about 88 Earth days) and then in darkness for another. Heat can’t be conducted through many km of solid rock to even things out.
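The arithmetic behind Mercury’s long days: for a planet spinning in the same sense as it orbits, the sunrise-to-sunrise (solar) day is 1/(1/P_rot − 1/P_orb). The sketch below plugs in Mercury’s sidereal rotation period and orbital period in Earth days (standard reference values). It ignores Mercury’s eccentric orbit, which makes the Sun’s apparent motion across its sky quite uneven.

```python
def solar_day(sidereal_rotation_days: float, orbital_period_days: float) -> float:
    """Sunrise-to-sunrise period for a planet rotating prograde."""
    return 1.0 / (1.0 / sidereal_rotation_days - 1.0 / orbital_period_days)


MERCURY_ROTATION_D = 58.646  # sidereal rotation period, Earth days
MERCURY_YEAR_D = 87.969      # orbital period, Earth days

day = solar_day(MERCURY_ROTATION_D, MERCURY_YEAR_D)
print(f"orbit/spin ratio: {MERCURY_YEAR_D / MERCURY_ROTATION_D:.3f}")  # about 1.5, i.e. 3:2
print(f"solar day: {day:.0f} Earth days = {day / MERCURY_YEAR_D:.2f} Mercury years")
```

The solar day comes out near 176 Earth days, two Mercurian years, so each spot gets roughly one Mercurian year of daylight followed by one of night.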

There’s much, much more demanded of a planet for habitability – and demanded also of its star, the stars near it (violent cataclysms to provide heavy elements but only before the stellar system condenses into star + planets), its possible neighboring planets, and a whole lot about the planet itself – its chemical constituents, tectonic activity, plain old size (a rather narrow range to keep water but not massive amounts of hydrogen), water depth, and more. We’ll get to all of these in the process of exploring habitability.

We’ll need a benign, long-lived star, a planet at the right distance from it, a rocky planet yet with significant water, a fine tuning of chemical elements in air, water, and rock, and quite a bit more. Let’s start with the central star.

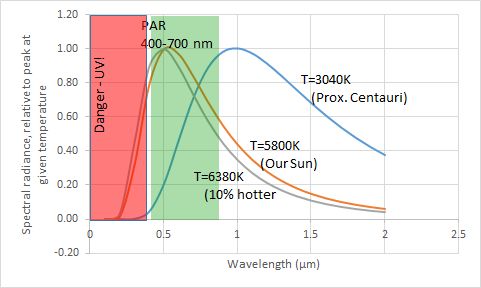

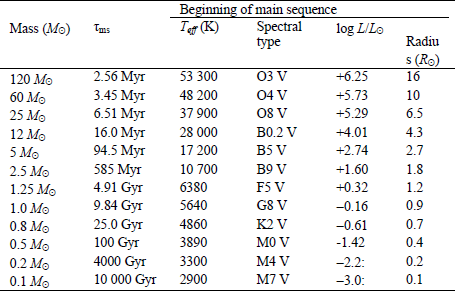

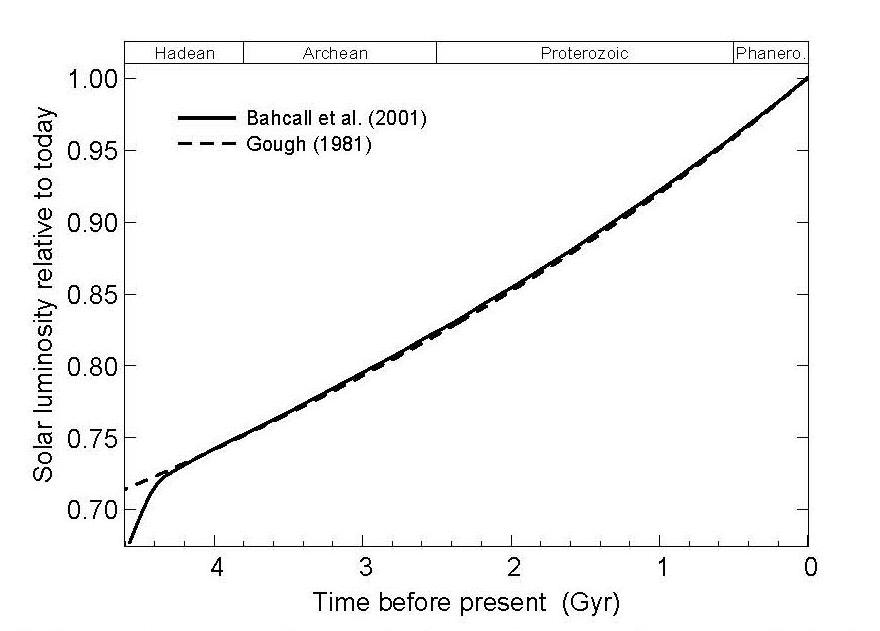

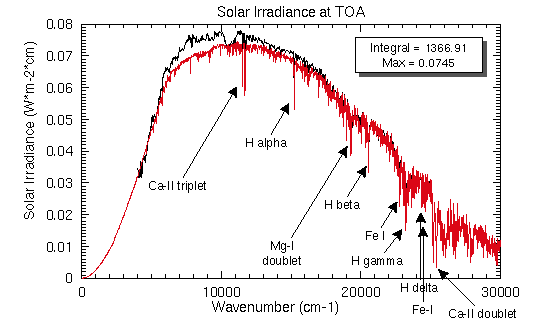

A star is a nuclear fusion reactor. The nuclear energy released goes into tremendous kinetic energy of nuclei, into gamma radiation, and partly into the creation of the elusive neutrinos; all but the neutrinos “degrade” to heat and the accompanying electromagnetic radiation (light and light’s kin, much as a hot iron bar emits much light). Critical for the potential for life near it are its energy output (temperature, size) and longevity. The star’s mass is its central attribute. Its mass determines its temperature, its lifetime, and the stability of its output against flares and worse. Stars around the mass of our Sun spend most of their lives on what astronomers call the Main Sequence, slowly changing in temperature and thus in power output as they age. The changes have had striking consequences for life on Earth, to be covered later. Stars provide nearby planets with electromagnetic radiation, distributed among types we call infrared (long wavelengths, low energy), visible light, and ultraviolet (UV). For stars of modest size, for much of their lives their output is strongly in the visible band, with modest UV. Hot stars are more problematic about UV. Even a 10% increase in stellar temperature boosts the UV to 18% of total energy, from 13%.
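The UV, visible, and infrared shares of a star’s output can be estimated by integrating Planck’s blackbody formula over each band. This sketch uses the standard series expansion of that integral and assumes a perfect blackbody, 400 nm and 700 nm as the band edges, and 5772 K as the Sun’s effective temperature. Those choices are assumptions; shifting the band edges shifts the percentages by a point or two.

```python
import math

C2_M_K = 1.438777e-2  # second radiation constant h*c/k, metre-kelvin


def fraction_below(wavelength_m: float, temp_k: float, terms: int = 50) -> float:
    """Fraction of blackbody power emitted at wavelengths shorter than wavelength_m.

    Exact series for (15/pi^4) * integral of x^3/(e^x - 1) from x0 to infinity,
    where x0 = h*c / (lambda * k * T).
    """
    x0 = C2_M_K / (wavelength_m * temp_k)
    total = 0.0
    for n in range(1, terms + 1):
        total += math.exp(-n * x0) / n * (x0**3 + 3 * x0**2 / n + 6 * x0 / n**2 + 6 / n**3)
    return 15.0 / math.pi**4 * total


def band_fractions(temp_k: float, uv_edge_m: float = 400e-9, ir_edge_m: float = 700e-9):
    """(UV, visible, IR) shares of total blackbody output; edges are assumptions."""
    uv = fraction_below(uv_edge_m, temp_k)
    visible = fraction_below(ir_edge_m, temp_k) - uv
    return uv, visible, 1.0 - uv - visible


sun = band_fractions(5772.0)
hotter = band_fractions(1.1 * 5772.0)
print("Sun      UV/vis/IR:", [f"{f:.0%}" for f in sun])
print("T + 10%  UV/vis/IR:", [f"{f:.0%}" for f in hotter])
```

For the Sun this gives roughly 12% UV, 37% visible, and 51% infrared; raising the temperature by 10% pushes the UV share to about 17%, broadly in line with the figures above.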

By the author

The useful measure of wavelength is micrometers (μm, millionths of a meter), and the similarly useful measure of temperature is absolute temperature, measured from absolute zero, in kelvin (symbol K; a span of 1 kelvin is also 1°C; Kelvin and Celsius scales just start from different bases). The visible band is critical as an energy input for living organisms, as we will explore; it’s not just handy for current life, but arguably for any form of life, based on the energetics of chemical reactions involving the equally necessary carbon compounds (with carbon not just an accident of life on Earth but a fundamental property of chemistry). More massive stars, which are hotter, put out too much genetically damaging UV, even when a planet is at a distance providing the right stellar energy for an equable temperature. They also live too short a life for lengthy biological evolution – hope for bacteria to evolve before the star’s end but not much more. Less massive stars can be comfy providers for life. They offer somewhat less visible light to a planet at a distance giving a good temperature. They’re long-livers, a positive attribute. The smallest and coolest stars on the Main Sequence, the red dwarfs, have several key problems that we’ll delve into: they flare disastrously, and a planet has to be so close to stay warm that it becomes tidally locked, with one side always facing the star. Such was the end of hope for life on Proxima Centauri b, a planet around our nearest neighboring star, Proxima Centauri, which we know is small. How do we know that?

Life-giving radiation, part I

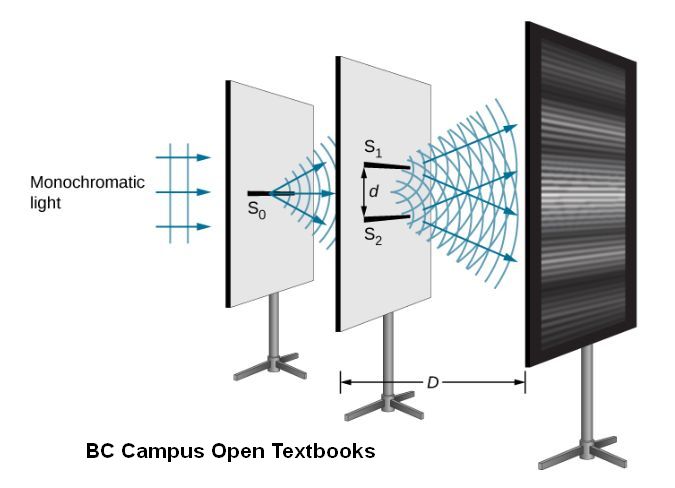

It’s an opportune moment to delve into the nature of electromagnetic radiation. Light and its heating equivalent were clear to the ancients. That light, and infrared, UV, radio waves, X-rays, and gamma rays are traveling waves of oscillating electrical and magnetic fields was not known until 1864. A number of physicists had been playing with large (macroscopic) items: magnets, wires, electrical currents, and their interactions. James Clerk Maxwell (left, as a young scientist) had formulated equations for all the electrical and magnetic phenomena… and realized that the mathematics allowed for a traveling wave as a solution of the combined equations. That solution had a predicted velocity that matched the estimates of the speed of light (which was first measured rather well in 1676 by Danish astronomer Ole Römer)! Voila!

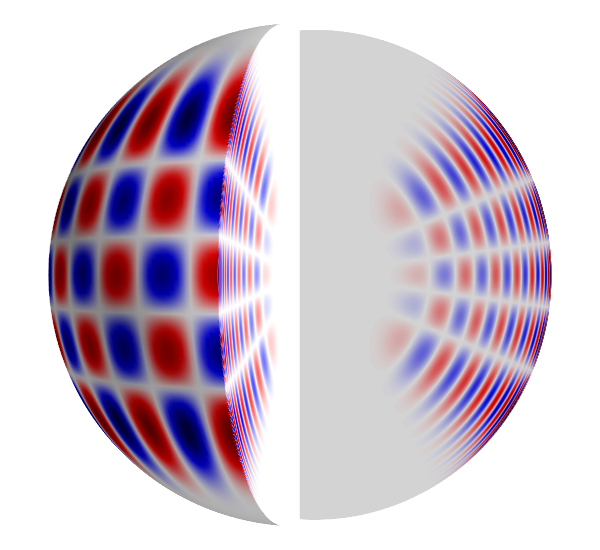

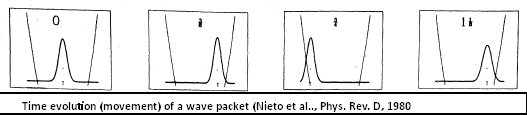

This description also jibed with the finding in 1801 by Thomas Young that light consisted of waves, shown by their ability to interfere with each other (below), just as water waves and sound waves do when peaks and troughs meet and cancel each other.

Physicists then knew that electromagnetic waves were described by several quantities. Essential is their wavelength, λ (lambda), such as that of the famous yellow D line of incandescent sodium vapor, at 589 nanometers (0.589 micrometers, or about 1/100th the diameter of a human hair). Their wavelength is tied to their frequency, ν (nu). They also have a polarization (the plane in which the electric field rises and falls, though the field can also rotate in an ellipse). Just as for material waves such as sound, frequency, wavelength, and velocity are related as

$$v = \lambda\nu \qquad (v = c \approx 3\times10^{8}\ \mathrm{m/s}\ \text{for light in vacuum})$$

For microwaves in our appliances with a wavelength of a bit over 12 cm, the frequency is 2.45 billion hertz (cycles per second) or 2.45 GHz. For the sodium D line ν is a remarkably high 509×10¹² Hz or 509 terahertz, THz. There are other properties of light that become important in various contexts. Light has polarization, which is the orientation of its oscillating fields relative to its direction of motion. In groups of photons, the way we almost always see it, it also has a degree of coherence or consistent timing with its fellow photons. This is important for lasers, as well as for clever ways to measure the sizes of stars.
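A two-line check of the numbers above, using ν = c/λ for waves in vacuum. The speed of light is rounded to 2.998×10⁸ m/s, and 12.24 cm is an assumed value for “a bit over 12 cm.”

```python
C_M_PER_S = 2.998e8  # speed of light in vacuum, rounded


def frequency_hz(wavelength_m: float) -> float:
    """Frequency of an electromagnetic wave in vacuum, from c = lambda * nu."""
    return C_M_PER_S / wavelength_m


microwave = frequency_hz(0.1224)  # assumed oven wavelength, ~12.2 cm
sodium_d = frequency_hz(589e-9)   # sodium D line
print(f"microwave: {microwave / 1e9:.2f} GHz")
print(f"sodium D:  {sodium_d / 1e12:.0f} THz")
```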

Light and other EM radiation transports energy through space. There’s an input of energy to generate radiation, which comes from the jiggling (regular acceleration) of charged particles, such as the movement of electrons up and down in a radio antenna. Thermal motion of charged particles in a body generates radiation. A hot body such as a heated iron bar in a blacksmith’s shop can generate radiation of sufficiently high frequency / short wavelength to be readily visible, as red to even whitish light. It’s long been known that a body at a given temperature emits radiation in a broad spectrum. Bodies of complex structure generate a smooth spectrum following well-established equations. The spectral distribution across all frequencies is a function only of the body’s temperature. The ideal case is the black body, capable of emitting radiation at all frequencies. The term “black” refers to the inverse case – a black body is also capable of absorbing all frequencies, by a principle of symmetry. Our own skin, bodies of water, most Earthly surfaces other than polished metals, and the Sun itself act very closely like black bodies.

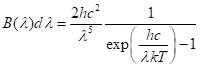

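If you care for the details in math, the spectral distribution follows the equation

$$B(\lambda)\,d\lambda = \frac{2hc^{2}}{\lambda^{5}}\,\frac{1}{e^{hc/(\lambda kT)} - 1}\,d\lambda$$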

where B(λ)dλ is the increment in the total amount of radiation in a range of wavelengths between a given λ and an incremented wavelength λ+dλ; h is Planck’s constant and k is Boltzmann’s constant (more about both of these, later), and c is the speed of light. Temperature here is the absolute temperature measured from absolute zero, which is the starting level for energy measurements. The concept of absolute zero came from the study of gases. The unit is the kelvin (K). The formula here shows a maximum or peak at a wavelength that depends on temperature. For the Sun, the peak is at a wavelength of visible light, 500 nm. For a body at a room temperature of 20°C or 293 kelvin, it is about 9.9 micrometers. The shape of the distribution of energy among wavelengths was presented earlier graphically.
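The temperature dependence of the peak is captured by Wien’s displacement law, λ_peak = b/T with b ≈ 2898 µm·K. The sketch below evaluates the wavelength form of Planck’s law, locates its maximum by brute-force scanning a log-spaced grid, and compares the result with Wien’s law. The grid limits and resolution are arbitrary choices, and the overflow guard simply returns zero where the exponential is astronomically large.

```python
import math

H = 6.62607015e-34       # Planck constant, J s
C = 2.99792458e8         # speed of light, m/s
K_B = 1.380649e-23       # Boltzmann constant, J/K
WIEN_B = 2.897771955e-3  # Wien displacement constant, m K


def planck_wavelength(lam_m: float, temp_k: float) -> float:
    """Planck spectral radiance per unit wavelength, W m^-2 sr^-1 m^-1."""
    x = H * C / (lam_m * K_B * temp_k)
    if x > 700.0:
        # exp(x) would overflow a float; the radiance is effectively zero here
        return 0.0
    return 2.0 * H * C**2 / lam_m**5 / math.expm1(x)


def peak_by_scan(temp_k: float, lo_m: float = 1e-8, hi_m: float = 1e-3,
                 n: int = 100_000) -> float:
    """Wavelength of maximum radiance, found by brute force on a log-spaced grid."""
    step = math.log(hi_m / lo_m) / (n - 1)
    grid = (lo_m * math.exp(i * step) for i in range(n))
    return max(grid, key=lambda lam: planck_wavelength(lam, temp_k))


for name, temp in (("Sun", 5772.0), ("20 °C body", 293.15)):
    print(f"{name}: scanned peak {peak_by_scan(temp) * 1e6:.3f} um, "
          f"Wien's law {WIEN_B / temp * 1e6:.3f} um")
```

Both approaches give about 0.50 µm for the Sun and about 9.9 µm for a body at 20°C.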

A much simpler equation also captures the behavior of the total energy radiated by a black body, per unit time and per unit area:

$$I = \sigma T^{4}$$

Here, T is the absolute temperature, a concept that came from the study of gases (!), and σ is the universal Stefan-Boltzmann constant, independent of the nature of the body. It’s rather remarkable in stating that a doubling of temperature results in a 16-fold increase in radiation. Hot stars expend energy rapidly… and die fast.
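A minimal sketch of the Stefan-Boltzmann law, using the CODATA value of σ and an emissivity factor that defaults to 1 (a perfect blackbody). The 5772 K solar surface and the 20 °C surface are illustrative inputs.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def blackbody_flux(temp_k: float, emissivity: float = 1.0) -> float:
    """Power radiated per unit area, I = emissivity * sigma * T^4, in W/m^2."""
    return emissivity * SIGMA * temp_k**4


sun_surface = blackbody_flux(5772.0)
room = blackbody_flux(293.15)
ratio = blackbody_flux(600.0) / blackbody_flux(300.0)
print(f"Sun's surface: {sun_surface:.2e} W/m^2")
print(f"20 °C surface: {room:.0f} W/m^2")
print(f"doubling T multiplies the flux by {ratio:.0f}")
```

The Sun’s surface radiates roughly 6.3×10⁷ W per square metre, a 20 °C surface about 420 W per square metre, and doubling the temperature multiplies the flux by 16.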

While the amount of radiation may look dramatic at high temperatures, there are a couple of major points to note. First, it applies to all bodies, even those we don’t consider particularly hot. In fact, any body above absolute zero is giving off such thermal radiation, at a rate that’s determined by its temperature and diagnostic of that temperature (there’s also a modifier, the emissivity, which we’ll get to shortly). That fact is critical in explaining the energy budget of the Earth or any body illuminated by other bodies. Second, the total radiation rate is finite. That’s a problem? For classical physics, it was a problem. Physicists considered an arbitrary cavity, say, a cube, and looked at all the ways that waves could fit in that met conditions at the surface (having a zero amplitude there, like violin strings at their supports). There is no limit to the number of modes as one considers shorter and shorter wavelengths. If all the modes or frequencies could contribute equally to the total radiation in the cavity, the sum goes to infinity. This was the ultraviolet catastrophe of classical electromagnetic theory. No concept of classical physics could explain it away. The solution lay in the idea that light comes in discrete packets, or photons, each with an energy set by its frequency, E = hν. A high-frequency mode can be excited only by a large packet of energy, which thermal motion rarely supplies, so the short-wavelength modes contribute almost nothing and the total stays finite. Radiation is quantized. All photons of a given wavelength are identical. Light is both a wave and a particle. In fact, in the full development of the theory of quantum mechanics over the decades, it became clear that everything is both a wave and a particle at the same time, with the wave character more apparent in some cases (diffraction of light… or of electrons or even atoms) and the particle character more apparent in others (molecules colliding with each other to generate gas pressure… but also light beams imparting kicks of momentum to lightweight objects).
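Photon energies make the quantum resolution tangible. With E = hν = hc/λ, the sketch below compares a visible photon near the Sun’s peak, a thermal-infrared photon near the peak for room-temperature bodies, and the typical thermal energy kT at 300 K. The Boltzmann factor e^(−E/kT) then shows how rarely thermal motion at that temperature can supply a visible-light quantum. Constants are CODATA values; the wavelengths are illustrative choices.

```python
import math

H_EV_S = 4.135667696e-15       # Planck constant, eV s
C_M_PER_S = 2.99792458e8       # speed of light, m/s
K_B_EV_PER_K = 8.617333262e-5  # Boltzmann constant, eV/K


def photon_energy_ev(wavelength_m: float) -> float:
    """E = h * nu = h * c / lambda for one photon, in electron-volts."""
    return H_EV_S * C_M_PER_S / wavelength_m


green = photon_energy_ev(500e-9)      # near the Sun's spectral peak
thermal_ir = photon_energy_ev(10e-6)  # near the peak for room-temperature bodies
kt_room = K_B_EV_PER_K * 300.0        # typical thermal energy at 300 K

print(f"500 nm photon: {green:.2f} eV")
print(f"10 um photon:  {thermal_ir:.3f} eV")
print(f"kT at 300 K:   {kt_room:.4f} eV")
# Boltzmann factor for thermal motion at 300 K supplying one 500 nm quantum
print(f"exp(-E/kT) for 500 nm: {math.exp(-green / kt_room):.1e}")
```

A visible photon carries about 2.5 eV, roughly 100 times kT at room temperature and comparable to the energies of chemical bonds, while a 10 µm photon carries only about 0.12 eV.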

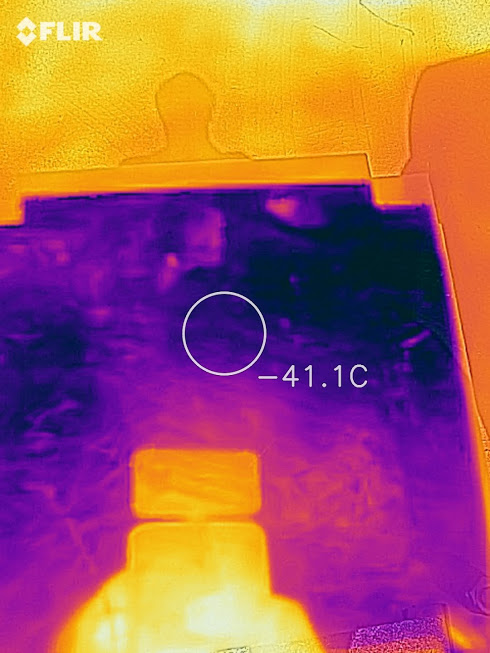

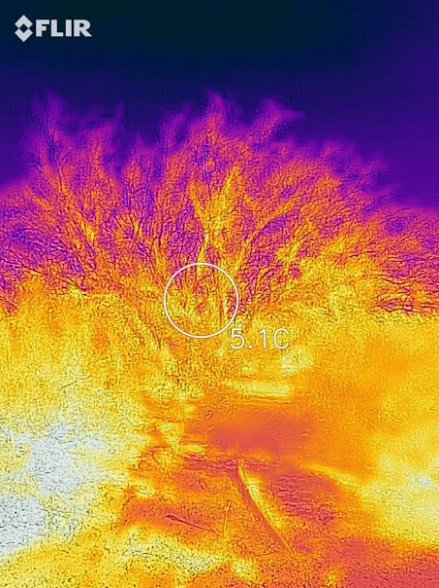

While stars emit light much as if they are perfect black bodies, there are deviations from the spectrum for familiar hot bodies. A tungsten filament in incandescent lamps of old emits less light than its temperature would indicate, and its spectrum doesn’t exactly follow the shape of a blackbody at a lower temperature. We say that tungsten and other shiny metals have emissivities (the ability to radiate in a given range of wavelengths, relative to a blackbody) less than one, sometimes far less. The gross accounting is in modifying the earlier equation to I = εσT⁴, with ε being the average emissivity over all wavelengths. This is readily seen in an image in the thermal infrared region that includes a polished aluminum plate reflecting the cold sky (along with an image of a shrub against the cold sky on the same winter day):

By the author

Here the effect is the inverse: the ability to absorb radiation is also proportional to the emissivity, from a physical principle called microscopic reversibility that we need not get into here. Emissivities less than unity figure only modestly in the energy balance of a planet. Most surfaces on a planet are chemically complex and consequently have many modes of absorbing energy. This gives them emissivities very close to one in the range of thermal infrared radiation: about 0.96-0.98 for vegetation and most soils, though as low as 0.6 in some narrow bands for Sahara Desert sands. Water has an emissivity of 0.96, ice, 0.97. The corrections from assuming ε = 1.000 can be moderately important for detailed climate models. A 4% error in emissivity leads to a 1% error in radiative temperature on the absolute temperature scale, because temperature varies as the fourth root of the emitted flux.
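The 4%-to-1% rule follows from inverting I = εσT⁴: temperature scales as the fourth root of flux divided by emissivity, and 0.96^(1/4) ≈ 0.99. The sketch below assumes a 300 K water surface with emissivity 0.96 and a sensor that wrongly treats it as a perfect blackbody. It ignores the sky radiation reflected by the surface, which in real measurements partly offsets the error.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def temperature_from_flux(flux_w_m2: float, emissivity: float) -> float:
    """Invert I = emissivity * sigma * T^4 for the surface temperature, K."""
    return (flux_w_m2 / (emissivity * SIGMA)) ** 0.25


true_t = 300.0
emitted = 0.96 * SIGMA * true_t**4                # water surface, emissivity 0.96
naive_t = temperature_from_flux(emitted, 1.0)     # wrongly assuming a perfect blackbody
error = (true_t - naive_t) / true_t
print(f"inferred {naive_t:.1f} K vs true {true_t:.1f} K ({error:.1%} low)")
```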

I offer some more details and some history of the blackbody concept in an Appendix. And, in a later section, I’ll get into some details of how electromagnetic radiation interacts with molecules – how any molecule absorbs and emits radiation, based on how its electrons are deployed and how the radiative properties give us a planet’s energy balance, including prominently its greenhouse effect.

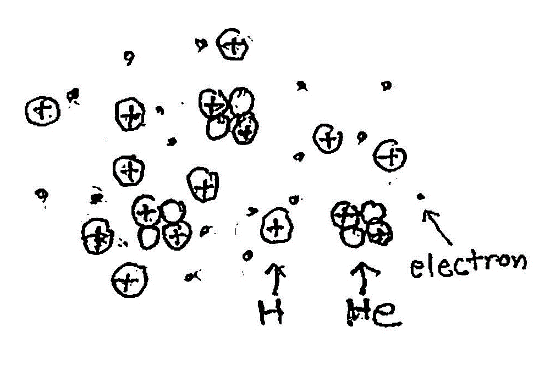

Exploring details: How stars work, particularly the “nice” stars

Stars form by accretion of gas, and perhaps some miscellany from a previous supernova or neutron star merger that created heavy elements. The Sun in its outer layers is about 73% hydrogen and 25% helium, with the atoms in most of its depth broken up into the bare nuclei and free electrons. The Sun’s original chemical composition was inherited from the interstellar medium out of which it formed. Originally it would have contained about 71.1% hydrogen, 27.4% helium, and 1.5% heavier elements.[51] The hydrogen and most of the helium in the Sun would have been produced by Big Bang nucleosynthesis in the first 20 minutes of the universe, and the heavier elements were produced by previous generations of stars before the Sun was formed, and spread into the interstellar medium during the final stages of stellar life and by events such as supernovae.[53] I have as a sidebar a quick introduction to how elements are composed of the nucleons protons and neutrons and of electrons.

The loss of gravitational energy as all the parts fall into each other’s gravity is balanced by the creation of heat. This is the process analogous to a bit of lead dropping a decent distance and thus getting a little hotter. Now magnify that heating enormously. Back in 1862, when no one knew that radioactivity and nuclear reactions occurred, William Thomson, later Lord Kelvin, attempted to use his formidable math and process-modeling skills to figure out the age of the Sun, based on its rate of energy loss. Assuming that the Sun had been heated once, by gravitational infall, with no continuing source of heat, and had been cooling ever since, he calculated an age of 20 million years for the Sun. Nice try, but it failed to match later-established ages of fossils and, of course, the reality of nuclear reactions. In itself that’s not a failure in the process of doing science; an error can force you to discover new principles. The real error is refusing, when confronted with the reality of nuclear reactions, to accept the new part of physics after it has been solidly confirmed. Some theories convince those skeptical of them, others outlive them. Still, Kelvin started the ball rolling to understand the energy course of the Sun. It illustrates the maxim of Francis Bacon from the 17th century, “Truth emerges more readily from error than from confusion.” Kudos for reducing the confusion… and for at least countering the ideas held in his time that the Earth was infinitely old or very young (remember Archbishop Ussher and 4004 BC).
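An order-of-magnitude version of this kind of estimate is now called the Kelvin-Helmholtz timescale: gravitational energy released by contraction, divided by the present luminosity. The sketch below is that textbook estimate, not Kelvin’s own calculation. It uses modern solar values and assumes a uniform-density Sun (structure factor 3/5); factors of order one from the real density profile and the virial theorem could shift the answer by a factor of a few.

```python
G = 6.674e-11               # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30            # solar mass, kg
R_SUN = 6.957e8             # solar radius, m
L_SUN = 3.828e26            # solar luminosity, W
SECONDS_PER_YEAR = 3.156e7


def contraction_lifetime_years(structure_factor: float = 0.6) -> float:
    """Years the Sun could shine at today's luminosity on contraction alone.

    Gravitational energy ~ structure_factor * G * M^2 / R; 0.6 (= 3/5) is the
    value for a uniform-density sphere, an assumption here.
    """
    energy_j = structure_factor * G * M_SUN**2 / R_SUN
    return energy_j / L_SUN / SECONDS_PER_YEAR


lifetime = contraction_lifetime_years()
print(f"~{lifetime / 1e6:.0f} million years")
```

It lands near 20 million years, the same ballpark as Kelvin’s figure and far short of the billions of years the fossil and radiometric record requires.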

The chemical composition of the Sun and other stars as being mostly hydrogen has a checkered history. Cecilia Payne-Gaposchkin documented this thoroughly in her 1925 Ph.D. thesis, as she became the first woman to get a doctorate in astronomy at Radcliffe College. Male astronomers dismissed her discovery, holding to the belief that stars resembled rocky planets, albeit much hotter. This was doubly odd, because Arthur Eddington had already hypothesized that the fusion of hydrogen to helium was the main energy source of the Sun. Admittedly, it took until 1927 for Friedrich Hund to discover the quantum tunneling mechanism that would allow protons to approach each other closely enough to fuse. It was not until 1929 that Robert Atkinson and Fritz Houtermans applied tunneling to show that fusing hydrogen, with its mass deficit between four H atoms and one He atom, could release enormous energy at stellar temperatures. Still, bad show, my fellow white males, in holding back your field for some years for the sake of male pride. Cecilia Payne-Gaposchkin finally got a professorship at Harvard at age 56.

As hydrogen and other elements accreted to form the Sun, all energy was substantially conserved; there was no gain or loss of energy (if we count the emitted light) nor had any significant fusion of hydrogen occurred. As accretion proceeds, a star of modest mass, like the proto-Sun, has barely started on its time-development or evolution on what we term the Main Sequence – the pattern of changes in temperature, brightness, and fusion of hydrogen fuel into helium and other chemical elements, basically all determined by the initial mass of the star. That pattern makes sense, given the knowledge of physics, particularly of nuclear fusion, that we need to dive into. We may start with the fundamental concepts of energy, in its diverse forms – gravitational, thermal, mechanical, elastic, chemical, nuclear, electromagnetic. Briefly, energy is the capacity to do work against a resistance, such as compressing a spring or a gas-filled cylinder, increasing the speed of a vehicle, making a chemical reaction go “uphill” in energy, pumping water uphill, etc. Nuclear energy is trickier to fathom, inherently involving Einstein’s special relativity and its equivalence of mass and energy, E = mc². In any event, we can with some ease consider changes in energy – e.g., rearrangements in atomic nuclei releasing large quantities as heat or radiation (pure electromagnetic energy). In the Appendix I clarify (I hope) a number of concepts of force, energy, power, flux per area or per solid angle, all particularly relevant to our encounters with stars such as our Sun. A key facet is that types of energy can be converted into each other. Electromagnetic radiation such as light can be converted into heat; heat can be converted to mechanical motion, as in an automobile engine; mechanical energy is readily converted into heat via friction.

The interconversion of energy (or energy plus mass) is a theme throughout any discussion of how a star performs nuclear fusion, converts mass to heat and “light” in the most general sense, how radiation is again converted into heat at a planet, how heat gets partly converted into the energy of wind and storms, and on and on. Some points of the science and of the philosophy of science are in a sidebar.

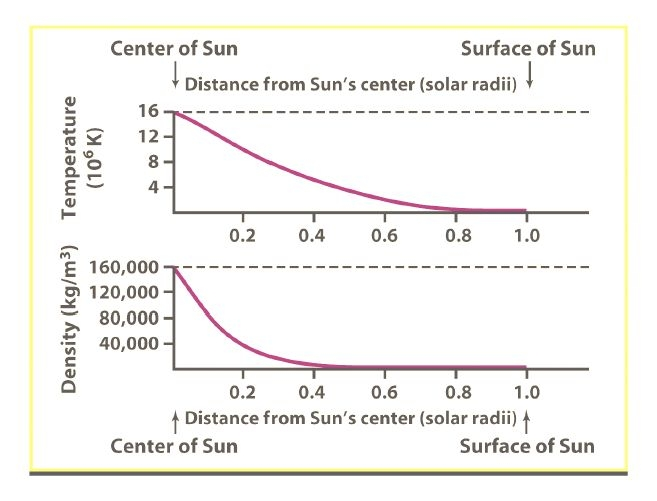

Making stars: the gravitational collapse of gases creates very high temperatures, to an estimated 15 million kelvin in the center of the Sun. (The kelvin is a unit of temperature the same size as the degree Celsius, only counted from a start at absolute zero, -273.15°C.) The Sun reached a fairly stable structure, with a hot center enabling actually rather slow nuclear fusion reactions, using up less than a billionth of its mass each year; see below where I give a quick calculation that you make far more energy metabolically than the Sun does by nuclear fusion, per unit mass. The core is composed of mostly the two simplest chemical elements, hydrogen and helium, both fully ionized. All the atoms have had their electrons stripped off to bounce around, making a plasma of such electrons and the bare nuclei – protons, helium nuclei (combos of two protons with two neutrons), and a few heavier elements from the Sun’s origin.
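A rough version of the per-mass comparison, under stated assumptions: the solar luminosity and mass are standard values, while the 100 W metabolic rate and 70 kg body mass are rough assumptions for a resting adult. The mass converted to energy follows from E = mc²; dividing by about 0.0071, the fraction of hydrogen’s mass released when it fuses to helium, estimates how much hydrogen is processed.

```python
L_SUN_W = 3.828e26              # solar luminosity
M_SUN_KG = 1.989e30             # solar mass
C_M_PER_S = 2.998e8             # speed of light
SECONDS_PER_YEAR = 3.156e7
FUSION_MASS_FRACTION = 0.0071   # share of hydrogen's mass released on fusing to helium

HUMAN_POWER_W = 100.0           # assumption: rough resting metabolic rate
HUMAN_MASS_KG = 70.0            # assumption: typical adult body mass

sun_w_per_kg = L_SUN_W / M_SUN_KG
human_w_per_kg = HUMAN_POWER_W / HUMAN_MASS_KG

# Mass turned into energy each year (E = m c^2), as a fraction of the Sun's mass
converted_per_year = L_SUN_W * SECONDS_PER_YEAR / C_M_PER_S**2 / M_SUN_KG
# Hydrogen actually processed is larger, since only ~0.71% of its mass is released
hydrogen_per_year = converted_per_year / FUSION_MASS_FRACTION

print(f"Sun:   {sun_w_per_kg:.1e} W/kg")
print(f"Human: {human_w_per_kg:.1f} W/kg, about {human_w_per_kg / sun_w_per_kg:,.0f} times the Sun")
print(f"Fraction of solar mass converted per year: {converted_per_year:.1e}")
print(f"Fraction of solar mass fused per year:     {hydrogen_per_year:.1e}")
```

The Sun produces about 2×10⁻⁴ W per kilogram, while a resting human produces about 1.4 W per kilogram, several thousand times more. Even counting all the hydrogen processed, the Sun uses up on the order of 10⁻¹¹ of its mass per year.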


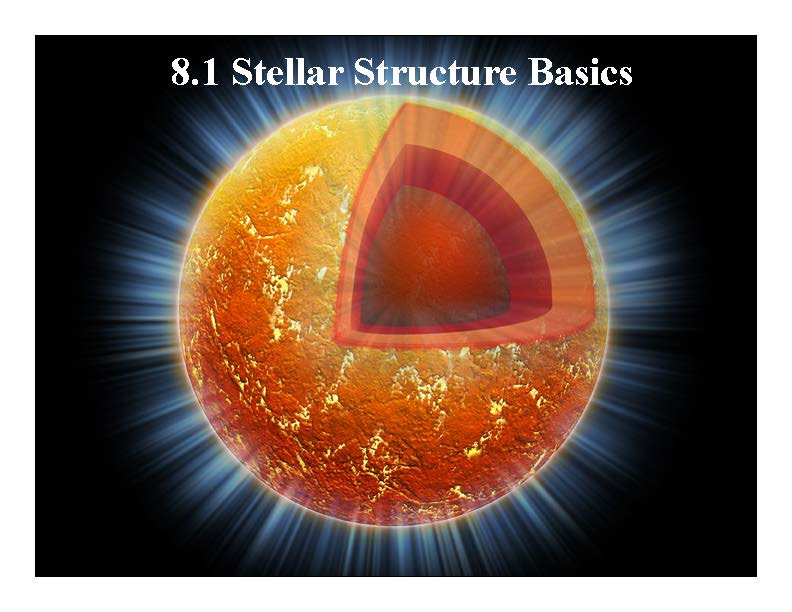

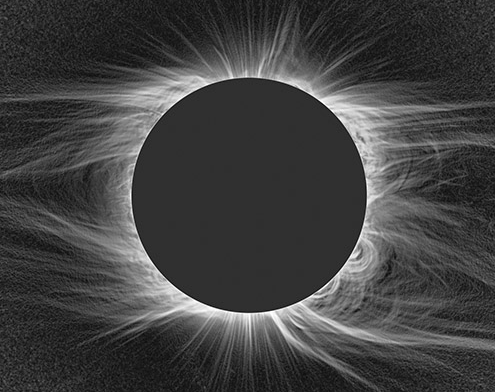

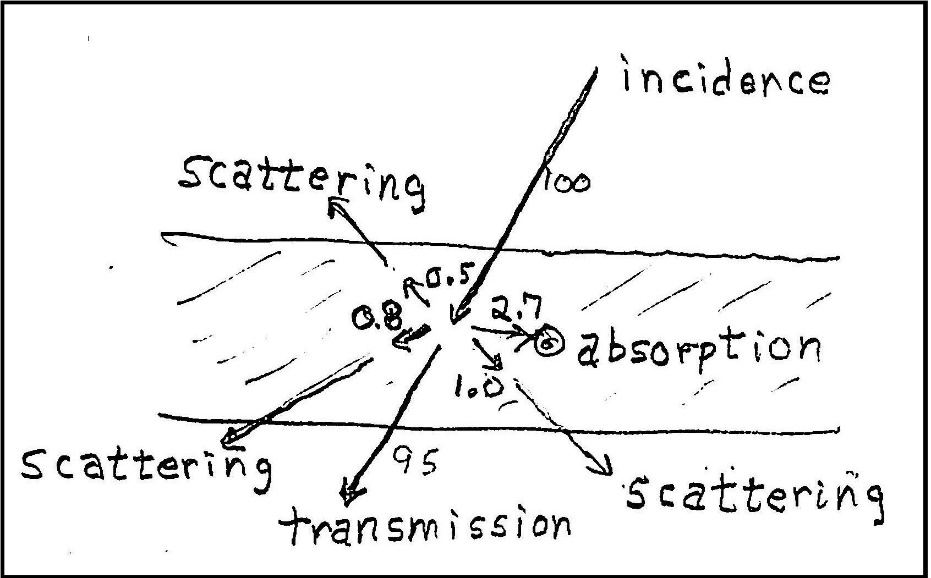

All the nuclear fusion and continued energy release occurs in the core. Surrounding this core is a “shell” or range of distances from the center over which electromagnetic radiation caroms around like a dense set of billiard balls – highly energetic gamma rays slowly being absorbed or scattered to create a wealth of less energetic rays, finally down to mostly light at the surface of the Sun. Surprisingly, this electromagnetic energy, moving at the speed of light, 300,000 km per second, should take only about 2 seconds to reach the surface, but it is estimated to take 170,000 years with all the bouncing back and around. The energetic packets of light, the photons, readily encounter electrons and protons (and some helium nuclei) and get changed in direction by this. They take a “random walk” through the thick core, which is at the center about 180 times denser than water. With random moves, a photon can eventually get almost any distance from its start, but the number of steps taken and thus the time taken can be phenomenal. I have a Python code simulation that provides an estimate of the long journey of a photon.
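A minimal random-walk sketch of the photon’s journey follows; it is an illustration, not the author’s simulation. Each scattering sends the photon one fixed step in a uniformly random direction. The first part counts how many steps it takes to get a given number of step lengths from the center, showing that the count grows as the square of the distance. The second part applies that scaling to the Sun, assuming a single constant mean free path throughout, which is a large simplification since the real mean free path varies enormously with depth. The mean free paths tried are hypothetical round numbers.

```python
import math
import random


def random_direction(rng: random.Random) -> tuple[float, float, float]:
    """Unit vector pointing in a uniformly random direction in 3D."""
    z = rng.uniform(-1.0, 1.0)
    phi = rng.uniform(0.0, 2.0 * math.pi)
    s = math.sqrt(1.0 - z * z)
    return s * math.cos(phi), s * math.sin(phi), z


def steps_to_escape(radius_in_steps: float, rng: random.Random) -> int:
    """Unit-length scatterings before a photon starting at the center
    first ends up outside a sphere of the given radius."""
    x = y = z = 0.0
    n = 0
    while x * x + y * y + z * z < radius_in_steps**2:
        dx, dy, dz = random_direction(rng)
        x, y, z = x + dx, y + dy, z + dz
        n += 1
    return n


def mean_steps(radius_in_steps: float, trials: int = 500, seed: int = 1) -> float:
    rng = random.Random(seed)
    return sum(steps_to_escape(radius_in_steps, rng) for _ in range(trials)) / trials


# Random-walk scaling: roughly (R / step)^2 steps are needed, not R / step.
for r in (5, 10, 20):
    print(f"R = {r:2d} steps: mean {mean_steps(r):7.1f} scatterings (R^2 = {r * r})")

SUN_RADIUS_M = 6.96e8
C_M_PER_S = 3.0e8
SECONDS_PER_YEAR = 3.156e7


def escape_time_years(mean_free_path_m: float) -> float:
    """N = (R / step)^2 steps, each taking step / c seconds.
    Assumes one constant mean free path throughout the Sun."""
    n_steps = (SUN_RADIUS_M / mean_free_path_m) ** 2
    return n_steps * mean_free_path_m / C_M_PER_S / SECONDS_PER_YEAR


print(f"straight line: {SUN_RADIUS_M / C_M_PER_S:.1f} s")
for mfp in (1e-2, 1e-3, 1e-4):  # hypothetical mean free paths, metres
    print(f"mean free path {mfp * 1e3:g} mm -> ~{escape_time_years(mfp):,.0f} years")
```

For mean free paths between 0.1 mm and 1 cm the escape time ranges from about 5,000 to about 500,000 years, bracketing the 170,000-year estimate, against about 2.3 seconds for a straight-line trip.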

The energy does slowly escape from the core of a star. There are three possible ways. First is as radiation itself, along the tangled path just noted. Second is by convection, with the hot plasma or hot gas churning like water boiling on a stove, so that the hot gas carried outward later radiates its own energy, such as light. Third is by conduction without churning of a mass, as in heat moving from a spoon in hot coffee to your hand. Conduction is negligible in stars like the Sun – it works only over very short distances. Convection becomes important only when the radiation gets strongly absorbed and converted to heat. It occurs when the rate of change of temperature with distance is great enough; a mildly heated pot of water doesn't churn. In the Sun outside the core, the absorption of radiation is modest until we look nearer the surface. There, electrons and nuclei have largely recombined into atoms, which have many ways to absorb radiation. Near the “surface” (a bit poorly defined for a mass of gas), the final disposition is mostly the emission of lots of light in a general sense – visible light (about 37% of the total), longer-wavelength infrared (about 50%), and about 13% as more energetic ultraviolet radiation (see Fig. 1 earlier). Hotter stars, especially late in their lives, have a different structure, much less stable. For the story on all manner of stars, light, medium, and heavy, a great read if you get into physics is on the webpage of Caltech astronomer and “astroinformatician” George Djorgovski, http://www.astro.caltech.edu/~george/ay20/Ay20-Lec7x.pdf.
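The visible, infrared, and ultraviolet shares can be checked by treating the Sun as a blackbody at its effective surface temperature, about 5772 K, and integrating Planck's law. This sketch assumes band edges of 400 nm and 700 nm (other choices shift the percentages a bit) and uses the standard series expansion of the integrated Planck function; the function name is illustrative.

```python
import math

H = 6.626e-34      # Planck constant, J s
C = 2.998e8        # speed of light, m/s
K_B = 1.381e-23    # Boltzmann constant, J/K
T_SUN = 5772.0     # effective surface temperature of the Sun, K (blackbody assumption)


def fraction_below(wavelength_m, temp_k=T_SUN, n_terms=60):
    """Fraction of blackbody power emitted at wavelengths shorter than wavelength_m.

    Series for the integrated Planck function:
    F = (15 / pi^4) * sum_n exp(-n x) / n * (x^3 + 3 x^2 / n + 6 x / n^2 + 6 / n^3),
    with x = h c / (wavelength k T).
    """
    x = H * C / (wavelength_m * K_B * temp_k)
    total = 0.0
    for n in range(1, n_terms + 1):
        total += math.exp(-n * x) / n * (x**3 + 3 * x**2 / n + 6 * x / n**2 + 6 / n**3)
    return 15.0 / math.pi**4 * total


uv = fraction_below(400e-9)                  # shorter than 400 nm
visible = fraction_below(700e-9) - uv        # 400 to 700 nm
infrared = 1.0 - fraction_below(700e-9)      # longer than 700 nm
print(f"UV {uv:.1%}, visible {visible:.1%}, IR {infrared:.1%}")
```

Under these assumptions it gives roughly 12% ultraviolet, 37% visible, and 51% infrared, close to the figures above; the real solar spectrum departs somewhat from a perfect blackbody.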

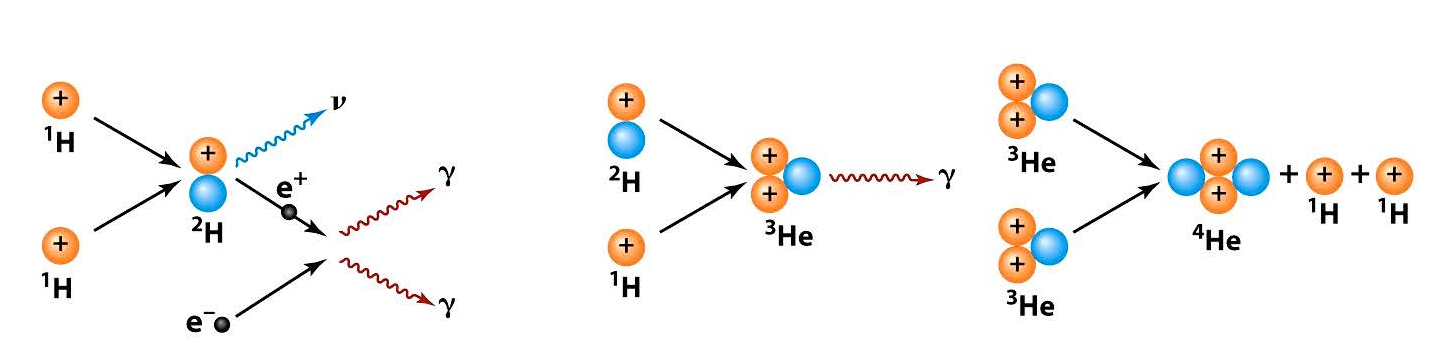

For much of the lifetime of the Sun and similar “nice” stars that are stable and long-lived, the principal nuclear reaction is the fusion of protons (hydrogen nuclei) into helium nuclei. By a series of steps called the proton-proton (p-p) chain, four protons turn into one helium nucleus.

Below, from Astronomy 122, N. Peter Armitage, Johns Hopkins University

The details of the p-p chain are engrossing. We'll delve into these shortly, noting first that the overall energy release as 4 protons (and 2 electrons) become a helium-4 nucleus is 26.72 million electron volts, or MeV. The energies involved in nuclear fusion are prodigious, so we use several kinds of units and notation. One electron volt is the energy an electron gains when accelerated through a potential difference of one volt, and 1 MeV is a million of those. Multiply the voltage by the charge of the electron, 1.602×10⁻¹⁹ coulombs, to get metric energy units. Then, 1 MeV is 1.602×10⁻¹³ joules – a tiny amount of energy for a single particle, but if we fused just 1 gram of protons into helium, the release would be 640 billion joules. That's about 178,000 kilowatt-hours! (If exponential notation such as 1.602×10⁻¹³ is unfamiliar, please check out another Appendix.) Another perspective on the energy release is that the kinetic energy of molecules bouncing around at room temperature, near 27°C or 300 K, is minuscule in comparison, about 1/25th of a single electron volt.
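The unit conversions in this paragraph take only a few lines to check. This sketch counts only proton masses (ignoring the electrons), takes 26.72 MeV per helium-4 nucleus formed, and uses the mean kinetic energy (3/2)kT for the room-temperature comparison; the variable names are illustrative.

```python
E_CHARGE = 1.602e-19        # charge of the electron, C (so 1 eV = 1.602e-19 J)
M_PROTON_G = 1.6726e-24     # mass of a proton, g
Q_MEV = 26.72               # energy released per helium-4 nucleus formed, MeV
K_B = 1.381e-23             # Boltzmann constant, J/K

joules_per_mev = 1e6 * E_CHARGE                 # 1.602e-13 J
protons_per_gram = 1.0 / M_PROTON_G             # about 6.0e23 protons
reactions_per_gram = protons_per_gram / 4       # four protons per helium nucleus
energy_j = reactions_per_gram * Q_MEV * joules_per_mev
energy_kwh = energy_j / 3.6e6                   # 1 kWh = 3.6 million J

room_ev = 1.5 * K_B * 300 / E_CHARGE            # mean kinetic energy at 300 K, in eV

print(f"{energy_j:.3g} J, {energy_kwh:,.0f} kWh, room temperature about 1/{1 / room_ev:.0f} eV")
```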

The following is a dive into some of the details of the nuclear fusion that powers the Sun, and us. You may wish to skip ahead to a summary.

The origin of the huge energy exchanges in fusion reactions is the great strength of the strong force that binds protons and neutrons together in nuclei. Very few of us alive now have any experience of a release of nuclear energy, such as happened (by fission rather than fusion) at Hiroshima and Nagasaki. A comparison with the more familiar energetic chemical reactions is telling. One very energetic one-step reaction is the combination of two neutral hydrogen atoms to create a hydrogen molecule, H₂. This releases 7.24×10⁻¹⁹ joules of energy, or 216,000 joules per gram of hydrogen. Nuclear fusion of one gram of hydrogen to 0.993 grams of helium (a 0.7% loss of mass) releases 640 billion joules, 3 million times more. The famous formula of Einstein, E = mc², then explains the enormity of the energy release. That energy is embodied in three forms. First is the set of gamma rays emitted at the fusing nuclei, with energies of 0.511 MeV (4 of the gammas, from the two positrons annihilating with electrons) and 5.49 MeV (the other 2 gammas). Second is the kinetic energy of the new particles rebounding from the reactions: 0.420 MeV for the first step (occurring twice) and 12.86 MeV for the final step. Their collisions with other nuclei amount to sharp decelerations and accelerations of their electrical charges, which generate electromagnetic radiation – more gammas. Third is the energy carried away by the neutrinos (denoted by the Greek letter nu, ν), about 0.5 MeV in all; this comes out of the 0.420 MeV released in each first step rather than adding to it. The total energy release is then 26.72 MeV. Most of that comes out as heat and thus light (and ultraviolet and infrared radiation); the neutrinos zip out of the Sun with about 1 chance in 100 billion of being captured to heat the Sun. They are the weirdest particles, interacting so very weakly with all other matter that they could traverse light-years of lead before being absorbed. There's more to say about them later and in a Sidebar.
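A short sketch can check the E = mc² arithmetic, the comparison with the chemical energy of forming H₂, and the energy budget of the three forms. It assumes standard atomic masses for hydrogen-1 and helium-4 (using atomic rather than nuclear masses counts the positron annihilation automatically) and takes the step energies from the paragraph above.

```python
U_MEV = 931.494          # energy equivalent of one atomic mass unit, MeV
M_H1_U = 1.00782503      # atomic mass of hydrogen-1 (proton plus electron), u
M_HE4_U = 4.00260325     # atomic mass of helium-4 (nucleus plus two electrons), u
C = 2.998e8              # speed of light, m/s
AVOGADRO = 6.022e23      # atoms per mole
H2_BOND_J = 7.24e-19     # energy released forming one H2 molecule from two H atoms, J

# E = m c^2 from the mass lost when four hydrogen atoms end up as one helium atom.
# Atomic masses already account for the two positrons annihilating with two electrons.
mass_defect_u = 4 * M_H1_U - M_HE4_U
q_mev = mass_defect_u * U_MEV                     # about 26.73 MeV
fraction_lost = mass_defect_u / (4 * M_H1_U)      # about 0.7% of the mass
fusion_j_per_g = fraction_lost * 1e-3 * C**2      # fusing 1 g of hydrogen, J

# Chemical comparison: one H2 molecule per two hydrogen atoms (1.008 g per mole of H)
chem_j_per_g = AVOGADRO / 1.008 / 2 * H2_BOND_J   # about 216,000 J per gram
ratio = fusion_j_per_g / chem_j_per_g              # about 3 million

# Energy budget of one pass through the chain, MeV, using the step energies in the text
budget_mev = {
    "4 annihilation gammas at 0.511": 4 * 0.511,
    "2 gammas from p + d -> He-3 at 5.49": 2 * 5.49,
    "2 x (p + p -> d + e+ + nu), includes the neutrinos' share": 2 * 0.420,
    "He-3 + He-3 -> He-4 + 2p": 12.86,
}
total_mev = sum(budget_mev.values())               # about 26.72 MeV

print(f"Q = {q_mev:.2f} MeV, mass lost {fraction_lost:.2%}, {fusion_j_per_g:.3g} J/g")
print(f"fusion / chemical = {ratio:.2g}, budget total = {total_mev:.2f} MeV")
```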

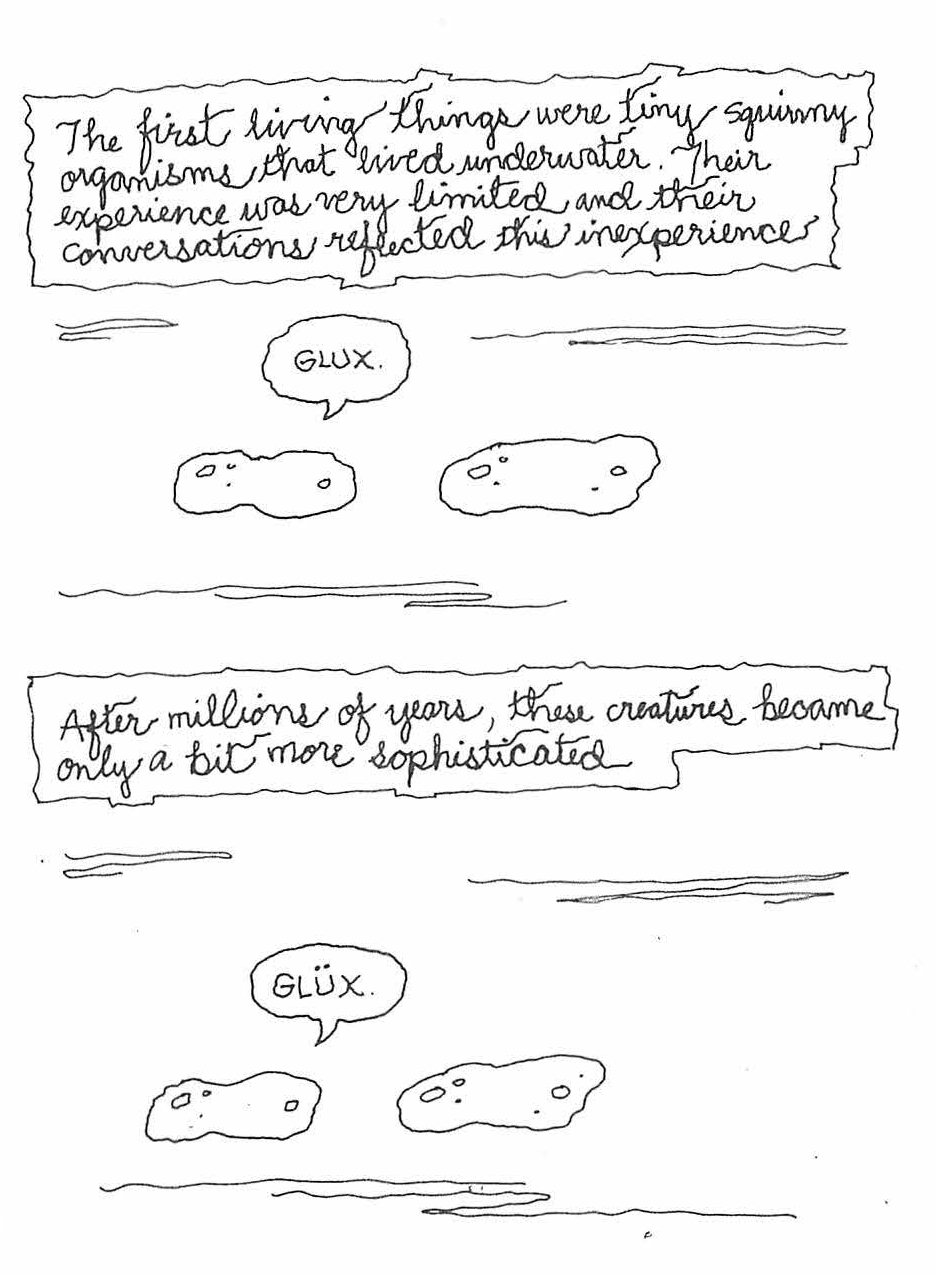

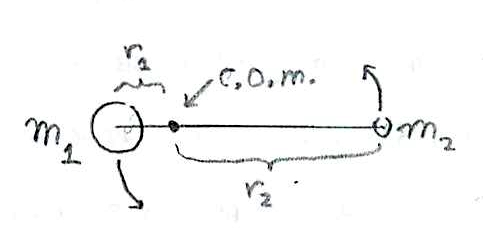

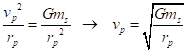

The first step: two protons create a deuteron, a positron, and a neutrino. This is the leftmost part of the sketch above. It turns out to happen at an extremely slow rate, such that the average proton lasts 9 billion years before fusing! Good thing for us, giving the Sun a long lifetime and leaving enough time for evolution. One major part of the slowness is the difficulty of getting two protons close enough to fuse. The protons repel each other very strongly via their electrical charges. The nuclear force can overwhelm the electrical repulsion between the two protons and fuse them together only when they approach within a distance about the size of the protons themselves, about one femtometer, 10⁻¹⁵ m. That's 1/100,000th the size of a typical atom. At any separation of the protons, r, the electrical potential energy is proportional to 1/r. The formula is $U(r) = \dfrac{e^2}{4\pi\varepsilon_0 r}$. Here, e is the charge of the electron and ε₀ is a fundamental constant called the permittivity of free space. Plugging in the numerical values (1.6×10⁻¹⁹ coulombs and 8.85×10⁻¹² farads per meter) with r = 1 femtometer, we get 2.31×10⁻¹³ joules. A “small” number, but that's (1) equivalent to 1.44 MeV and (2) the average kinetic energy of a proton at a temperature of 11 billion kelvin! The temperature of the core of the Sun has been confidently modeled (a long story) at 15 million kelvin, way short by a factor of about 700!
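The Coulomb-barrier numbers can be reproduced directly from $U(r) = e^2/(4\pi\varepsilon_0 r)$. This sketch assumes a fusion distance of exactly 1 femtometer and equates the barrier to the mean kinetic energy $\tfrac{3}{2}kT$ to get the equivalent temperature; the function name is illustrative.

```python
import math

E_CHARGE = 1.602e-19     # elementary charge, C
EPS0 = 8.854e-12         # permittivity of free space, F/m
K_B = 1.381e-23          # Boltzmann constant, J/K


def coulomb_energy_j(r_m):
    """Electrical potential energy of two protons r_m apart: e^2 / (4 pi eps0 r)."""
    return E_CHARGE**2 / (4 * math.pi * EPS0 * r_m)


barrier_j = coulomb_energy_j(1e-15)          # at about one femtometer
barrier_mev = barrier_j / (1e6 * E_CHARGE)   # about 1.44 MeV
t_equiv_k = barrier_j / (1.5 * K_B)          # temperature whose mean energy (3/2) k T matches
shortfall = t_equiv_k / 15e6                 # compared with the 15 MK solar core
print(f"{barrier_j:.3g} J = {barrier_mev:.2f} MeV, T = {t_equiv_k:.2g} K, factor {shortfall:.0f}")
```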


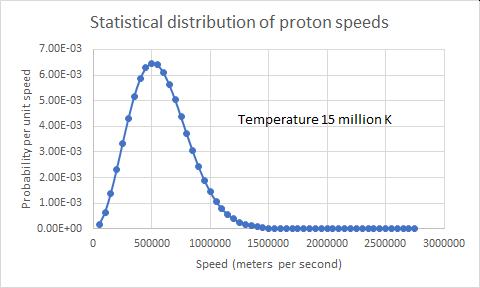

Maxwell, Boltzmann, and quantum tunneling to the rescue. Of course, some protons are moving faster than average. Let's look at the speed (note: velocity, taken precisely, means speed with a specified direction; I've used it a bit loosely up to here). The average energy of motion for particles at a given temperature is proportional to the absolute temperature: $E_{\text{average}} = \tfrac{3}{2}kT$. Here, k is Boltzmann's constant, 1.381×10⁻²³ joules per kelvin. At the Sun's core temperature of 15 MK (millions of kelvin), that energy for a single proton is 3.1×10⁻¹⁶ joules. We can convert that to an equivalent electrical potential accelerating an electron by dividing the energy by the charge of the electron. The potential is about 2000 volts (V). Some particles get fortuitous bounces to higher speed. By the very intriguing principles of statistical mechanics, we can figure out the probability of the protons having higher energy. We look at the “Maxwell-Boltzmann distribution” of energy in a large number of particles that have settled down by collisions to reach a defined temperature. (Ludwig Boltzmann really got around, didn't he?)
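The average energy and the equivalent voltage at the Sun's core temperature can be checked in a few lines, assuming the mean kinetic energy per particle is $\tfrac{3}{2}kT$:

```python
K_B = 1.381e-23        # Boltzmann constant, J/K
E_CHARGE = 1.602e-19   # elementary charge, C

T_CORE = 15e6                        # core temperature, K
e_avg_j = 1.5 * K_B * T_CORE         # mean kinetic energy per particle, (3/2) k T
volts = e_avg_j / E_CHARGE           # equivalent accelerating potential, V
print(f"{e_avg_j:.3g} J, about {volts:.0f} V")
```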

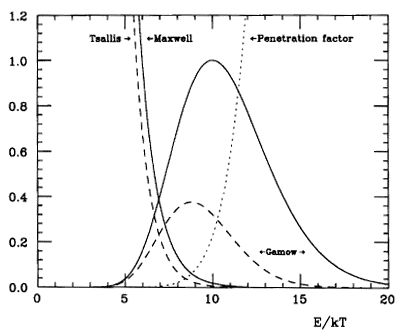

In a simple picture, the probability of an individual particle having a kinetic energy E when the group average is Eaverage falls off as the negative exponential exp(-E/Eaverage). (The full Maxwell-Boltzmann distribution falls off even faster at high energies, essentially as exp(-E/kT), where kT is two-thirds of Eaverage.) The relative chance of finding a particle with an energy, E, 700 times the average energy, Eaverage, is then about e⁻⁷⁰⁰, which is far, far less than 1 proton in the entire Sun. It turns out that protons with only about 7 times the average energy (6.7 times, in the estimate below) do pretty well to drive fusion… in the sense that they are the ones responsible for most fusion reactions. In the simple picture, the fraction of protons at this energy or higher is about 0.12%. They're plentiful, but that's not good enough; the fusion process is frustrated by the need to invoke, as it were, the weak force. We'll see that shortly. Caveat: the core of the Sun is a fully ionized plasma of mostly protons and electrons. It doesn't strictly meet the conditions for its protons to race around with the distribution of speeds of the Maxwell-Boltzmann distribution, but it's “close” [https://www.scielo.br/scielo.php?script=sci_arttext&pid=S0103-97331999000100014]. The more exact distribution has been calculated.
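To compare the simple exponential picture with the full Maxwell-Boltzmann distribution, this sketch computes the fraction of particles above a given multiple of the average energy both ways. The Maxwell-Boltzmann tail uses the standard closed form erfc(√x) + 2√(x/π)·e⁻ˣ with x = E/kT; the proton count treats the whole Sun as protons, a deliberate overestimate. The function names are illustrative.

```python
import math


def simple_tail(ratio):
    """Fraction above ratio * E_average in the simple exp(-E / E_average) picture."""
    return math.exp(-ratio)


def maxwell_boltzmann_tail(ratio):
    """Fraction above ratio * E_average for a 3D Maxwell-Boltzmann gas (E_average = 1.5 k T)."""
    x = 1.5 * ratio                      # threshold energy in units of k T
    return math.erfc(math.sqrt(x)) + 2.0 * math.sqrt(x / math.pi) * math.exp(-x)


N_PROTONS_SUN = 1.989e30 / 1.6726e-27    # generous upper bound: the whole Sun as protons

print(simple_tail(6.7))                  # about 0.0012
print(maxwell_boltzmann_tail(6.7))       # smaller, about 0.00016
print(N_PROTONS_SUN * simple_tail(700))  # expected number of such protons: far below one
```

The simple picture gives about 0.12% above 6.7 times the average energy, while the full distribution gives roughly 0.016%, so the simple picture is, if anything, generous to the protons.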

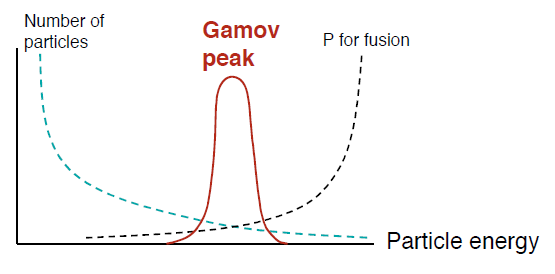

George Gamow realized that there is a “useful” probability of fusion for protons moving with only about 7 times the average energy. In quantum mechanics, particles also act as waves. Coming to a barrier higher than their energy, the wave still leaks or “tunnels” into and past the barrier, weakly but assuredly. This phenomenon is well documented in many cases, including the technology of tunnel diodes in electronics. Gamow estimated the probability of tunneling with a formula that resembles the uncertainty relation – a particle can't be simultaneously “pinned down” for both its position and its momentum. The product of (uncertainty in position) × (uncertainty in momentum) always equals or exceeds the value of Planck's constant, h, multiplied by a small numerical factor. The probability that the proton tunnels through the barrier of electrical repulsion to get close enough to the other proton for fusion is approximately $P \approx \exp\left(-\dfrac{\pi e^2}{\varepsilon_0 h v}\right)$. Here, v is the speed of the collision. A proton at 6.7 times the average energy of the core temperature is moving at about 1.6 million meters per second! The probability of tunneling is then about 2 in 10,000 collisions. We may summarize the story of proton speed and quantum tunneling with a couple of complementary diagrams:
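The tunneling estimate can be sketched with the standard Gamow factor, $\exp(-\pi e^2/(\varepsilon_0 h v))$. This sketch assumes the target proton is at rest, so the closing speed is the speed of the energetic proton itself, and it ignores the details of the nuclear force; the function name is illustrative.

```python
import math

E_CHARGE = 1.602e-19   # elementary charge, C
EPS0 = 8.854e-12       # permittivity of free space, F/m
H = 6.626e-34          # Planck constant, J s
K_B = 1.381e-23        # Boltzmann constant, J/K
M_P = 1.6726e-27       # proton mass, kg


def tunneling_probability(v_rel):
    """Gamow factor exp(-pi e^2 / (eps0 h v)) for two protons closing at speed v_rel."""
    return math.exp(-math.pi * E_CHARGE**2 / (EPS0 * H * v_rel))


e_avg = 1.5 * K_B * 15e6                # mean kinetic energy in the 15 MK core, J
v = math.sqrt(2 * 6.7 * e_avg / M_P)    # speed of a proton carrying 6.7 times that energy
print(f"v = {v:.3g} m/s, P = {tunneling_probability(v):.2g}")
```

It gives a speed of about 1.6 million m/s and a tunneling probability of roughly 1.6×10⁻⁴, a couple in 10,000.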

Stephen Smartt, Univ. of Toledo

M. Coraddu et al., Brazilian J. Physics 29 (1999)

The faster a proton is moving, the higher the probability that it will tunnel through the “Coulomb barrier” to get to the other proton. At the same time, the probability of finding such a fast proton declines rapidly with that speed. It's the product of both probabilities that counts. This total probability peaks at intermediate proton speeds. The peak is at the “most effective energy,” while there are contributions to fusion over a moderate range of energies.
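The “most effective energy” (often called the Gamow peak) can be located numerically by multiplying the Boltzmann factor exp(-E/kT) by the tunneling factor exp(-√(E_G/E)) and scanning for the maximum. This sketch uses the textbook Gamow energy E_G = 2 m_r c² (π α Z₁ Z₂)², with m_r the reduced mass (half the proton mass for two protons) and E the collision energy in the center-of-mass frame, and compares the scan with the standard closed form E₀ = (E_G (kT)²/4)^(1/3). The function names are illustrative.

```python
import math

ALPHA = 1 / 137.036          # fine-structure constant
MP_C2_KEV = 938272.0         # proton rest energy, keV
K_B_KEV = 8.617e-8           # Boltzmann constant, keV per kelvin


def gamow_energy_kev(z1=1, z2=1, reduced_mass_c2_kev=MP_C2_KEV / 2):
    """Gamow energy E_G = 2 m_r c^2 (pi alpha Z1 Z2)^2; the default is two protons."""
    return 2 * reduced_mass_c2_kev * (math.pi * ALPHA * z1 * z2) ** 2


def fusion_weight(e_kev, kt_kev, eg_kev):
    """Boltzmann factor times tunneling factor at center-of-mass energy e_kev."""
    return math.exp(-e_kev / kt_kev - math.sqrt(eg_kev / e_kev))


def gamow_peak_scan(t_kelvin, e_max_kev=50.0, n=20000):
    """Energy (keV) on a fine grid where the product of the two factors is largest."""
    kt, eg = K_B_KEV * t_kelvin, gamow_energy_kev()
    grid = [e_max_kev * (i + 1) / n for i in range(n)]
    return max(grid, key=lambda e: fusion_weight(e, kt, eg))


def gamow_peak_formula(t_kelvin):
    """Closed form for the peak: E0 = (E_G (kT)^2 / 4)^(1/3)."""
    kt = K_B_KEV * t_kelvin
    return (gamow_energy_kev() * kt**2 / 4) ** (1 / 3)


print(gamow_peak_scan(15e6), gamow_peak_formula(15e6))   # both near 5.9 keV
```

For a 15 MK core the peak comes out near 5.9 keV in the center-of-mass frame. In the frame where one proton strikes another at rest, the incoming proton needs twice that, about 12 keV, roughly six times the average energy of about 1.9 keV.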

However, extremely frequent collisions and a decent probability of tunneling close to another proton are still far from enough! If a bit over a tenth of a percent of protons had this favorable energy and each of these had a probability of about 2 in 10,000 of tunneling close enough to another proton for fusion, then only a few in 10 million of the protons' collisions could bring them close enough to fuse. Protons at the density of the Sun's core are spaced, on average, about 22,000 femtometers (2.2×10⁻¹¹ m) apart, while these energetic ones move at about 1.6 million meters per second. An energetic proton should then collide about (speed)/(distance) times per unit of time, or 70 quadrillion times per second! However, protons succeed in fusing only about once in 9 billion years; that's one success in about 20 decillion collisions (2×10³⁴)!
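The collision-count arithmetic can be checked in a few lines, using the spacing, speed, and lifetime quoted in this paragraph and the crude assumption that a proton “collides” each time it travels one average spacing.

```python
SPACING_M = 2.2e-11       # typical proton spacing in the core, m (from the text)
SPEED_M_S = 1.6e6         # speed of the energetic protons, m/s (from the text)
LIFETIME_YR = 9e9         # average wait before a proton fuses, years (from the text)
YEAR_S = 3.156e7          # seconds in a year

collisions_per_s = SPEED_M_S / SPACING_M                          # about 7e16 per second
collisions_per_fusion = collisions_per_s * LIFETIME_YR * YEAR_S   # collisions per success
print(f"{collisions_per_s:.2g} collisions/s, about {collisions_per_fusion:.1g} per successful fusion")
```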

The weak interaction slows down the first fusion of two protons enormously. The Sun burns up only about 3×10⁻¹⁹ of its mass in hydrogen per second. Of course, that's still 600 million tonnes. The reason for the low rate is that the fusion reaction requires the action of the weak force. A proton has to turn into a neutron, a positron, and a neutrino with the help of the weak force. The name “weak” is very appropriate. Two protons have to bring in, as it were, the help of a neutrino that only reacts extremely weakly with other matter. Emitting a neutrino in a reaction is the converse of capturing a neutrino, and capturing one is an extremely rare process – hence the idea that a neutrino has an even chance of being captured only after traversing some light-years' thickness of dense lead!
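The burn rate can be estimated from the Sun's luminosity, about 3.828×10²⁶ W. This sketch assumes all of that power comes from the p-p chain, with 26.72 − 0.53 = 26.19 MeV per helium nucleus staying in the Sun (the neutrinos' share escapes), and counts four proton masses of hydrogen consumed per helium nucleus.

```python
L_SUN = 3.828e26          # solar luminosity, W
M_SUN = 1.989e30          # solar mass, kg
M_P = 1.6726e-27          # proton mass, kg
C = 2.998e8               # speed of light, m/s
MEV_J = 1.602e-13         # joules per MeV
YEAR_S = 3.156e7          # seconds in a year

q_heat_mev = 26.72 - 0.53                           # energy per helium nucleus that stays in the Sun
reactions_per_s = L_SUN / (q_heat_mev * MEV_J)      # helium nuclei made per second
hydrogen_kg_per_s = reactions_per_s * 4 * M_P       # hydrogen consumed per second, kg
fraction_per_s = hydrogen_kg_per_s / M_SUN          # share of the Sun's mass per second
fraction_per_yr = fraction_per_s * YEAR_S           # share of the Sun's mass per year
mass_to_energy_kg_per_s = L_SUN / C**2              # mass converted to radiated energy, kg/s
print(f"{hydrogen_kg_per_s:.2g} kg/s, {fraction_per_s:.2g} per s, {fraction_per_yr:.2g} per yr")
```

It gives about 6×10¹¹ kg (600 million tonnes) of hydrogen per second, about 3×10⁻¹⁹ of the Sun's mass per second or about 10⁻¹¹ per year, while roughly 4 million tonnes of mass per second is converted to radiated energy.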

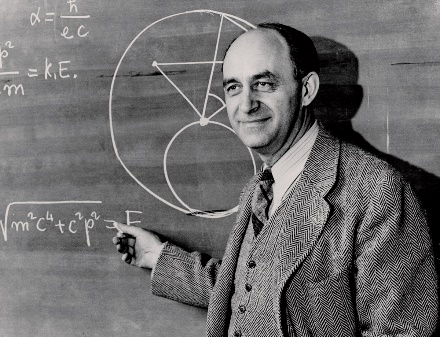

Side note: Fusion with the release of a neutrino is a variant of sorts on the spontaneous decay of some unstable nuclei in what's called beta decay. For example, a nucleus of thorium-234, an isotope of the fairly common heavy element thorium, decays to protactinium-234 by emitting an electron, called a beta particle for historic reasons, and an antineutrino (the antimatter partner of the neutrino). Beta decay was hard to explain in terms of known physics until the polymath and physicist Enrico Fermi developed a very successful theory of it. Fermi attended a Hitchcock lecture at Berkeley, in which one student of J. Robert Oppenheimer used elegant quantum theory. Afterward, he told fellow physicist Emilio Segrè, “I went to their seminar and was depressed by my inability to understand them [Oppenheimer's students]. Only the last sentence cheered me up; it was ‘And this is Fermi's theory of beta decay.’” Excess humility, sly humor? I think the latter, for this super-genius.

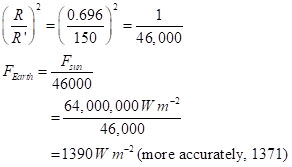

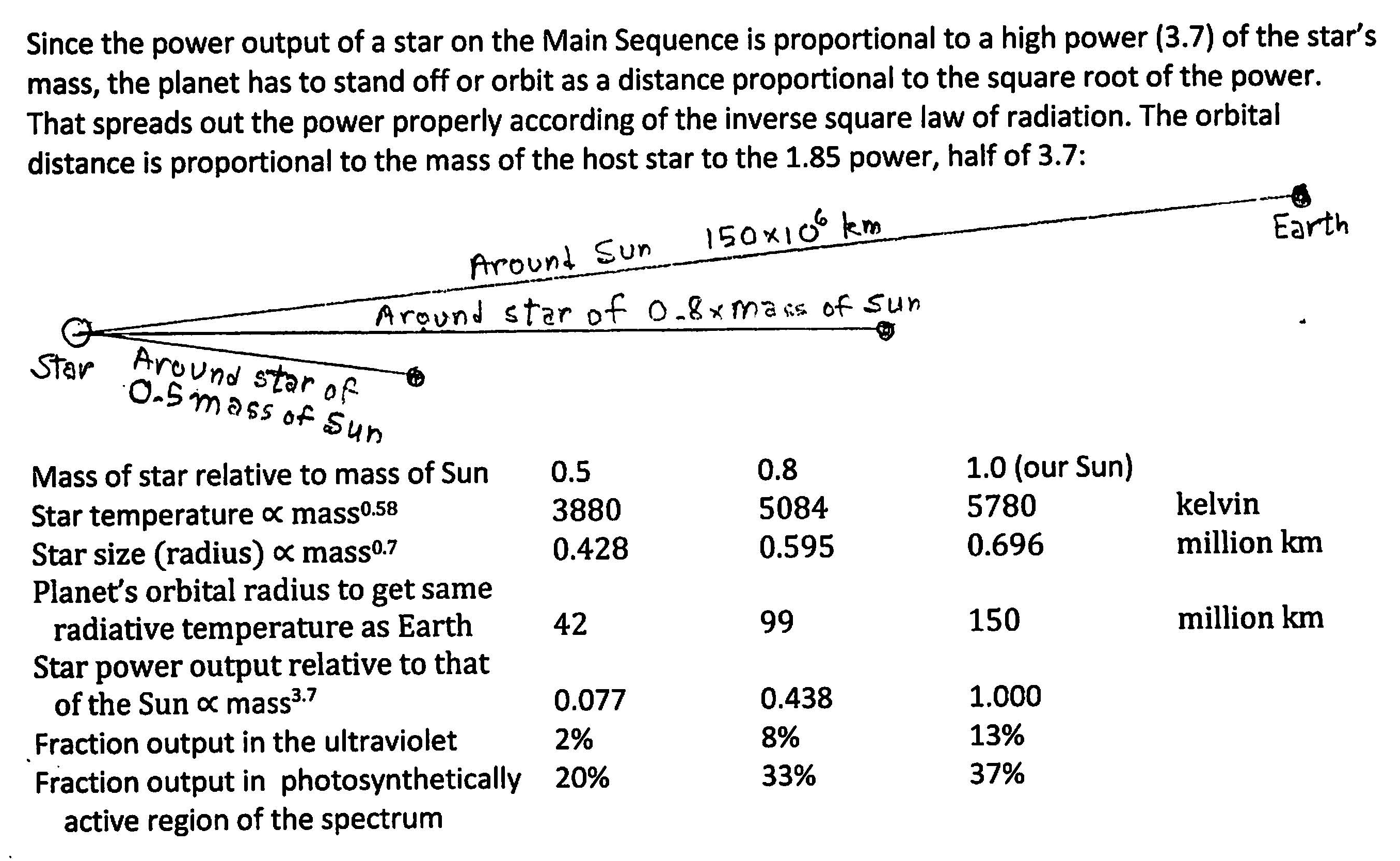

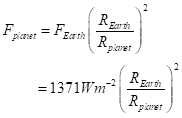

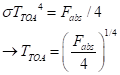

The neutrinos are very puzzling particles. First, we note that they carry off about 2% of the energy of the reaction, 0.53 MeV that should be debited from the 26.72 MeV in the equation above. They really do carry the energy away. They interact so weakly with ordinary matter that they exit the Sun without leaving their energy there (thus not helping heat our planet, either). That weak interaction expresses the converse property to that showing up in fusion: hard to make, hard to catch. While a gamma ray inside the Sun may travel some millionths of a meter before interacting with an electron or other charged particle, a “typical” neutrino has only an even chance of being absorbed by matter if that matter is, say, the amount encountered in moving through several light-years of lead. Neutrinos are truly weird dudes; their properties and even their connection to why any matter exists at all are explored in a sidebar. Their existence was postulated by Wolfgang Pauli in 1930, and Enrico Fermi built them into his theory of beta decay, decades before there were instruments to detect them. They had to exist in order for energy and momentum both to be conserved in beta decay!